Compatibility testing is an integral component of many quality assurance strategies, allowing companies to see if their software performs correctly across different platforms. Even a desktop-exclusive program has several major operating systems to account for and hundreds, if not thousands, of hardware variations that could affect stability. Understanding the compatibility testing process and its benefits can help a team launch a product that reaches the widest possible audience.

Though compatibility testing offers a number of benefits, a software testing team must also overcome several significant challenges to get the most out of this technique. There are also specific practices these teams should follow to get the best results and ensure comprehensive test coverage.

In this article, we look closely at compatibility testing, including the essential steps that teams must follow as well as the most useful testing tools currently available.

What is Compatibility Testing in software testing & engineering?

Compatibility testing examines software across different devices, operating systems, browsers, hardware, and firmware to make sure that it performs to a team’s expectations. Users run a program on a wide variety of devices, so the company must be confident that they all have a similar experience. For example, compatibility tests can involve checking each feature of an app to make sure it works on every major operating system.

Without thorough compatibility testing, a company might release an application that doesn’t work on certain popular devices. These checks must be comprehensive because a problem can arise in many ways; the application might not function with one specific type of graphics card, for example. Paired with other forms of software testing, compatibility checks help quality assurance teams make sure their program is ready for release.

1. When and why do you need to do Compatibility Testing for mobile applications, websites, systems, and cross-browser?

Companies conduct compatibility testing during their software testing phase, specifically once they have a ‘stable’ version of the program that accurately reflects how it will behave for customers. This usually follows alpha testing, acceptance testing, and the other forms of testing that look for general stability and feature-related issues. Because those checks have already caught most general defects, any issues that emerge during the compatibility testing phase are likely to be compatibility-specific. Running these checks too early can effectively make them redundant, as minor changes later in the development cycle can radically affect compatibility.

Compatibility testing for browsers and software is important because it helps companies release an application that they know will run adequately on the devices their customers actually use. Cross-browser compatibility testing, for example, helps ensure that people using Opera have the same experience as those using Firefox and other major browsers. The team usually tests as many hardware and software variations as its time and budget allow. This means testers must prioritize the systems and browsers their customers are most likely to use, giving them broad coverage and a viable product.

2. When you don’t need to do software Compatibility Testing

Companies might create a bespoke application for a specific operating system or device model, massively limiting the number of necessary checks. Cross-browser compatibility testing is unnecessary if the program does not run in a browser, for example. Time can also seriously limit a company’s ability to perform these tests, though testing teams should still verify that the software works on major systems and browsers. There are also certain projects that cannot benefit from basic compatibility tests.

3. Who is involved in Compatibility Testing?

Here are the main people who conduct compatibility testing in software testing:

1. Developers

The development team checks the application’s performance on one platform during development; this may even be the only platform the company intends to release the program on.

2. Testers

Quality assurance teams, either within the company or externally hired, check many possible configurations as part of the application’s compatibility testing stage, including all major operating systems and browsers.

3. Customers

The company’s customers might have hardware or configurations that the team was unable to thoroughly test, potentially making their user experience the first real check of that specific setup.

Benefits of Compatibility Testing

The usual benefits of software compatibility testing include:

1. Broader audience

The more thoroughly a team tests its software, the more devices it can confidently release it for, so a wide audience across many platforms is able to use the application. This can increase sales and may also improve the number of positive reviews the software receives from users.

2. Improves stability

Compatibility testing in software testing is essential for highlighting stability and performance issues, which can often be more pronounced on different devices – especially if the developers only designed this application for one platform. A system compatibility test shows the company what users (on a wide range of devices) could expect from the software’s overall performance.

3. Refines development

These tests also have significant long-term benefits for a development team. For example, mobile compatibility testing can reveal lessons about app development that a business can account for when it creates additional programs. Because teams can reuse what they learn, this may significantly lower the cost of compatibility testing for future projects.

4. Verifies other tests

Most forms of testing up to this point are limited in scope and do not test every possible hardware or software combination – these tests could effectively double-check these results. Cross-browser compatibility testing, for example, validates the pre-existing quality assurance stages by showing that the outcomes are the same when the user has a different browser.

5. Reduces costs

Compatibility testing can also lower costs for the current program by helping teams identify issues before public release, after which fixing errors becomes more expensive. The more varied a team’s tests are (and the higher their test coverage), the earlier errors tend to surface and the cheaper they are to fix.

Challenges of Compatibility Testing

Here are common challenges that companies may face when they implement compatibility testing in software testing:

1. Limited time

While automation tools and other solutions can significantly speed up compatibility tests by simulating a range of devices, this process must still fit the company’s development schedule. This means the testing team has to prioritize the most common devices and browsers so that the software works for the largest share of its audience.

2. Lack of real devices

These checks commonly use virtual machines that simulate the components and conditions of real devices, which is much cheaper (and faster) than acquiring the relevant hardware and platforms. However, this can reduce the accuracy of the results, especially since performance often depends on how users operate a real device.

3. Difficult to future-proof

Compatibility testing can only engage with platforms that already exist, so teams cannot guarantee that the application will run as expected on future versions of Windows or Google Chrome. Organizations can only fix such issues after launch, which is often more expensive, and the application might eventually become obsolete as a result.

4. Infrastructure maintenance

If a team decides to check a large number of platforms in-house, this may result in high infrastructure costs. Compatibility testing for mobile applications, for example, could involve sourcing many real mobile devices. While this is more accurate than testing on simulated hardware, it is expensive and typically involves regular maintenance.

5. High number of combinations

Compatibility testing accounts for many intersecting factors, such as the operating system, browser, hardware, firmware, and even screen resolution. Even if the test team has a lot of time, it would be effectively impossible to accommodate every single possibility. Configuration and compatibility testing must again prioritize the most likely device combinations.
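To illustrate how quickly the combinations multiply, the Python sketch below counts every pairing of a few operating systems, browsers, and screen resolutions, then drops pairings that cannot exist. The specific values are hypothetical placeholders rather than recommendations; a real list would come from the team’s own analytics.

```python
from itertools import product

# Hypothetical test dimensions; replace with values from your own analytics.
operating_systems = ["Windows 10", "Windows 11", "macOS 14", "Ubuntu 22.04"]
browsers = ["Chrome", "Firefox", "Edge", "Safari", "Opera"]
resolutions = ["1366x768", "1920x1080", "2560x1440"]

# Every (OS, browser, resolution) triple is a separate configuration to test.
all_configs = list(product(operating_systems, browsers, resolutions))


def is_possible(config):
    """Drop combinations that cannot exist, such as Safari outside macOS."""
    os_name, browser, _resolution = config
    return browser != "Safari" or os_name.startswith("macOS")


possible_configs = [c for c in all_configs if is_possible(c)]

print(len(all_configs))       # 4 * 5 * 3 = 60
print(len(possible_configs))  # 60 minus 9 Safari-on-non-macOS triples = 51
```

Adding just one more browser or resolution multiplies the total again, which is why filtering impossible pairs and prioritizing by usage matter so much.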

Characteristics of Compatibility Testing

The key characteristics of compatibility tests include:

1. Thorough

These checks must be able to isolate any possible compatibility issues that arise between devices – or the team might end up releasing a faulty program. For example, these checks must make sure every single feature of the application renders as expected, no matter the user’s screen resolution.

2. Expansive

The tests should maintain a balance of depth and breadth, helping teams investigate a number of issues across many device configurations. Cross-browser compatibility testing looks at an extensive range of OS and browser combinations, ensuring a high coverage level – sometimes with the help of an automated solution.

3. Bidirectional

This process involves both backward and forward compatibility testing. The former shows the team how their app will operate on older hardware and software; the latter checks it against the newest platforms, helping the team secure long-term performance even though their future-proofing capabilities are quite limited.

4. Repeatable

The issues that these checks uncover must be easy for other testers and departments to repeat – showing that they reflect errors that users are likely to encounter. If a website compatibility test indicates specific features aren’t functioning on a certain browser, repeatability helps developers address the problem.
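One way to support repeatability is to attach the tester’s environment details to every issue report. The Python sketch below uses the standard library’s `platform` module to record the operating system and CPU architecture; the report fields and the manually supplied browser string are hypothetical choices, not a standard format.

```python
import json
import platform


def capture_environment(browser=None):
    """Collect the details another tester needs to reproduce an issue."""
    return {
        "os": platform.system(),           # e.g. "Windows", "Linux", "Darwin"
        "os_release": platform.release(),  # OS release string
        "machine": platform.machine(),     # CPU architecture, e.g. "x86_64"
        "browser": browser,                # entered by the tester (hypothetical field)
    }


def make_issue_report(title, steps, expected, actual, browser=None):
    """Bundle the reproduction steps with the environment they were observed in."""
    return {
        "title": title,
        "steps": steps,
        "expected": expected,
        "actual": actual,
        "environment": capture_environment(browser),
    }


report = make_issue_report(
    title="Checkout button does not respond",
    steps=["Open the cart page", "Click 'Checkout'"],
    expected="Payment page opens",
    actual="Nothing happens",
    browser="Firefox 126",
)
print(json.dumps(report, indent=2))
```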

Types of Compatibility Testing

The main types of compatibility testing are as follows:

1. Backward Compatibility Testing

Backward compatibility testing involves checking the app on older hardware and older versions of operating systems and other software. This is essential because restricting these checks to modern devices may significantly limit the number of users; many people still use older operating systems, such as Windows 8.

2. Forward Compatibility Testing

Forward compatibility testing is similar but instead looks at modern or upcoming technologies to see if the app is likely to keep working for years despite advancements and updates. Without these tests, the software might even stop functioning with the next browser update, for example.

3. Browser Compatibility Testing

Website browser compatibility tests ensure a web application or site can work on various browsers; this is vital as they use different layout engines. Quality assurance teams even test cross-browser compatibility – meaning they check that each browser can handle the application across separate operating systems.

4. Mobile Compatibility Testing

Testing mobile apps is a similar process to checking desktop and web applications, especially as the phone’s OS is another key consideration. Android and iOS apps, for example, come in wholly different formats and require an entirely separate development and testing process to accommodate both.

5. Hardware Compatibility Testing

These checks look at the specific components that make up the machine and how they might affect a program; this is critical for virtually any type of device. For example, a computer could have a graphics card that cannot successfully render a web application’s interface.

6. Device Compatibility Testing

Some applications connect with external devices through Bluetooth, broadband, or a wired connection. An app might need to connect with a printer, for example. These tests aim to make sure the program engages with the platform’s own connections and any devices that it can access.

7. Network Compatibility Testing

If an application requires network functionality to run – such as by connecting with an online database through the company’s server – this requires numerous compatibility checks. This ensures the program is able to run at a suitable speed with a Wi-Fi, 4G, or 3G network connection.

What do we test in Compatibility Tests?

Compatibility testers usually check the following:

1. Performance

One of the main purposes of compatibility testing is to ensure stability, as some aspects of the application may be entirely incompatible with common platforms. By looking at the overall responsiveness of this program, the testing team ensures there are no serious crashes on certain devices.

2. Functionality

Compatibility testing also checks the general features and functions of an application to ensure the software is able to provide the correct results. For example, a customer relationship management system might be unable to offer sales data or general analytics for users with an outdated operating system.

3. Graphics

Some browsers or devices may struggle to render certain graphical elements for a number of reasons, and compatibility checks can reveal where this happens. For example, a program might only display correctly at specific screen resolutions unless the developers change how it lays out its content.

4. Connectivity

Compatibility tests also look at how the program connects to both the user’s device and its own database, including whether it can detect peripherals such as printers. These checks might, for example, reveal that the app is unable to connect to its own database on 3G networks.

5. Versatility

These checks make sure that the company’s application is versatile enough to work on old and new versions of the same operating system via backward and forward compatibility tests. This ensures that users aren’t locked out from the program if their software is a few years out of date.

Types of outputs from Compatibility Tests

The three main outputs of compatibility tests are:

1. Test results

The most common output for these checks is the results themselves, which can take many forms. For example, browser compatibility testing may reveal that a web app results in a memory leak on Microsoft Edge whilst the same app has no negative effects on Chrome-based browsers. Alternatively, the application could work exactly as the team expects on the relevant platforms.

2. Test logs

The test results also manifest in the form of the application’s own logs, which highlight any discovered software issues through error messages. These logs can even identify the specific part of a program that is causing this error. For compatibility testing in particular, testers must be familiar with how these logs manifest and present these issues across different platforms.

3. Test cases

Compatibility test cases set out which tests the team will run and offer a space for testers to record the results in a simple format. The testers should use their knowledge of the software, in conjunction with the results and logs, to identify an issue’s cause. The more information they provide, the sooner the developers can start fixing bugs.

Types of defects detected through Compatibility Testing

Here are the most common errors which compatibility tests can identify:

1. Layout scaling

A website compatibility test can show whether the elements that make up a web app or web page scale to fit the user’s device, specifically the resolution and size of their screen. If they don’t, some graphics may be difficult to see on specific browsers or screens.

2. Software crashes

Compatibility tests make it easier to see whether an application can run on some platforms at all. For example, a game developer could discover their product’s minimum system requirements by checking which devices crash on launch due to insufficient RAM or processor speed.

3. HTML/CSS validation issues

Different browsers and devices interpret code in different ways, with some automatically correcting simple coding mistakes such as an HTML tag that is never closed. Browser compatibility testing may identify invalid HTML or CSS that prevents the app from displaying its content or even performing basic functions.
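As a rough illustration of the unclosed-tag problem, the Python sketch below uses the standard library’s `html.parser` to flag tags that are opened but never closed. It is deliberately strict: valid HTML may omit some end tags (such as `</li>` or `</p>`), which this sketch would still report, so a full validator remains the better tool for real projects.

```python
from html.parser import HTMLParser

# Void elements never have a closing tag, so they are not tracked.
VOID_ELEMENTS = {
    "area", "base", "br", "col", "embed", "hr", "img", "input",
    "link", "meta", "source", "track", "wbr",
}


class UnclosedTagChecker(HTMLParser):
    """Track open tags and report any that are never explicitly closed."""

    def __init__(self):
        super().__init__()
        self.open_tags = []  # stack of (tag, line) awaiting a closing tag
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_ELEMENTS:
            self.open_tags.append((tag, self.getpos()[0]))

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS:
            return
        # Find the most recent matching open tag; anything opened after it
        # was never closed.
        for i in range(len(self.open_tags) - 1, -1, -1):
            if self.open_tags[i][0] == tag:
                for open_tag, line in self.open_tags[i + 1:]:
                    self.problems.append(f"<{open_tag}> opened on line {line} is never closed")
                del self.open_tags[i:]
                return
        self.problems.append(f"</{tag}> on line {self.getpos()[0]} has no matching open tag")


def find_unclosed_tags(html):
    checker = UnclosedTagChecker()
    checker.feed(html)
    checker.close()
    # Whatever is still open at the end of the document was never closed.
    for open_tag, line in checker.open_tags:
        checker.problems.append(f"<{open_tag}> opened on line {line} is never closed")
    return checker.problems


# Reports that the <span> is never closed before </div>.
print(find_unclosed_tags("<div><p>Hello</p>\n<span>World</div>"))
```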

4. Video playback errors

Many modern video players make use of HTML5 to stream videos online, with this potentially being a key part of a company’s web app. However, teams that check website browser compatibility might find that their app’s video features are not compatible with outdated browsers.

5. File security

Compatibility testing in software engineering can also find issues with file security and how this varies between devices. For example, newer versions of Windows have more robust input/output security. This can lead to the application (such as antivirus software) struggling to access the device’s files.

Compatibility Testing process

The usual steps of compatibility testing are:

1. Compile a test plan

A comprehensive test plan is critical for compatibility testing, and the quality assurance team can refer to it as necessary during their checks. For example, the plan details which devices the team will test and the criteria for a pass or fail; the team must also decide whether it will use robotic process automation.

2. Configure test cases

Test cases are similarly important, as they describe the specific compatibility checks the team will run and the devices it will use. Each case also lists the exact steps the testers will take, with ample space to record the outcome and any information that will help the developers fix compatibility issues.
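A test case can be represented as a simple record. The Python sketch below shows one hypothetical structure with fields for the platform, steps, expected result, and actual result; the exact-match pass/fail rule is a simplifying assumption, since real pass criteria come from the test plan.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CompatibilityTestCase:
    """One compatibility check on one platform (hypothetical structure)."""
    case_id: str
    feature: str
    platform: str                 # e.g. "Android 14 / Chrome"
    steps: List[str]
    expected: str
    actual: Optional[str] = None  # filled in by the tester after running the steps
    notes: str = ""               # extra detail to help developers reproduce the issue

    @property
    def status(self) -> str:
        # Simplifying assumption: pass only on an exact match with the expected result.
        if self.actual is None:
            return "not run"
        return "pass" if self.actual == self.expected else "fail"


case = CompatibilityTestCase(
    case_id="TC-014",
    feature="Login form",
    platform="Android 14 / Chrome",
    steps=["Open the login page", "Enter valid credentials", "Tap 'Sign in'"],
    expected="Dashboard loads",
)
print(case.status)  # not run
case.actual = "Blank screen after tapping 'Sign in'"
case.notes = "Reproducible on every attempt; works on Windows / Chrome"
print(case.status)  # fail
```

Keeping one record per feature and platform makes it easy to filter all failures for a given device when handing results to developers.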

3. Establish the testing environment

An isolated and independent test environment free of outside influences is necessary to ensure accurate tests, also letting the quality assurance team identify where the issues they uncover are coming from. On top of this, the testers can conduct their checks on the application without compromising the ‘real’ version in any way.

4. Execute the tests

With the test cases and environment fully prepared, the team can begin the compatibility tests – even with an automated solution, they only have a limited amount of time. Testers will need to prioritize the most common operating systems and device configurations to account for this, and ensure broad test coverage despite these limitations.

5. Retest

Once the tests are complete and the developers receive the test cases, they will modify the application in ways that improve its compatibility, though this may not be possible for all devices. The testers then recheck the app and verify that the issues they previously uncovered are no longer present and there are no new major errors.
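The retest comparison can be expressed as set arithmetic over failing test case IDs. This Python sketch, using hypothetical IDs, separates fixed issues, issues that persist, and new failures that may indicate a regression.

```python
def compare_runs(previous_failures, retest_failures):
    """Classify failing test case IDs from the original run and the retest."""
    previous, current = set(previous_failures), set(retest_failures)
    return {
        "fixed": sorted(previous - current),          # failed before, pass now
        "still_failing": sorted(previous & current),  # fix incomplete or not attempted
        "new_failures": sorted(current - previous),   # possible regressions
    }


result = compare_runs(["TC-03", "TC-07", "TC-12"], ["TC-07", "TC-15"])
print(result)
# {'fixed': ['TC-03', 'TC-12'], 'still_failing': ['TC-07'], 'new_failures': ['TC-15']}
```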

Common Compatibility Testing metrics

Here are some common metrics used for compatibility tests:

1. Bandwidth

Network compatibility tests measure how the application engages with various networks, including broadband and mobile data networks. The minimum bandwidth necessary for the program to perform its usual duties and connect to the company’s database might be too high for the average 3G connection, for example.
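The relationship between payload size, bandwidth, and load time is simple arithmetic: time equals size in bits divided by bandwidth in bits per second. The Python sketch below applies it to a hypothetical 2 MB response; the network speeds are illustrative assumptions, not measurements, and the calculation ignores latency and protocol overhead.

```python
# Illustrative downlink speeds in megabits per second (assumed, not measured).
NETWORK_SPEEDS_MBPS = {"3G": 1.0, "4G": 20.0, "Broadband": 100.0}


def transfer_seconds(payload_bytes, speed_mbps):
    """Ideal transfer time in seconds, ignoring latency and protocol overhead."""
    return payload_bytes * 8 / (speed_mbps * 1_000_000)


def minimum_bandwidth_mbps(payload_bytes, budget_seconds):
    """Bandwidth needed to deliver the payload within the time budget."""
    return payload_bytes * 8 / budget_seconds / 1_000_000


def check_networks(payload_bytes, budget_seconds):
    """Report which network types meet the load-time budget."""
    return {
        name: transfer_seconds(payload_bytes, mbps) <= budget_seconds
        for name, mbps in NETWORK_SPEEDS_MBPS.items()
    }


payload = 2_000_000  # hypothetical 2 MB response from the company's server
print(minimum_bandwidth_mbps(payload, budget_seconds=3.0))  # about 5.33 Mbps
print(check_networks(payload, budget_seconds=3.0))
# {'3G': False, '4G': True, 'Broadband': True}
```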

2. CPU usage

One way that performance issues manifest themselves is through disproportionately high CPU usage – this can mean the device simply does not meet the program’s minimum requirements. CPU issues might also affect the application’s response time, limiting its functionality and causing enough lag to disengage users.

3. System Usability Scale

The System Usability Scale (SUS) is a common way of measuring a program’s perceived usability, using ten statements that users rate on a five-point scale. The resulting SUS score ranges from 0 to 100 and might differ from one platform to the next due to graphical errors.
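SUS scoring follows a fixed formula: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The Python sketch below implements that formula and averages it per platform; the responses in the example are hypothetical.

```python
def sus_score(responses):
    """Score one completed SUS questionnaire (ten responses on a 1-5 scale)."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten responses, each between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5  # scale the 0-40 raw total to 0-100


def mean_sus(questionnaires):
    """Average score across respondents, e.g. everyone who tested on one platform."""
    scores = [sus_score(q) for q in questionnaires]
    return sum(scores) / len(scores)


# Hypothetical responses from two testers on each of two platforms.
by_platform = {
    "Windows / Chrome": [[5, 1, 5, 1, 5, 1, 5, 1, 5, 1], [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]],
    "Android / Firefox": [[3, 3, 3, 3, 3, 3, 3, 3, 3, 3], [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]],
}
for platform_name, answers in by_platform.items():
    print(platform_name, mean_sus(answers))
# Windows / Chrome 87.5
# Android / Firefox 62.5
```

A gap like this between platforms is a prompt to look for rendering or layout problems on the lower-scoring configuration.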

4. Total number of defects

This metric is common to most testing types and shows testers the program’s current health. The team can also compare defect totals between platforms; by doing this, the testers can highlight the errors that are due to incompatibility.
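Comparing defects across platforms can be sketched in a few lines of Python. Given a hypothetical log of defect IDs and the platforms where each was observed, the code totals defects per platform and isolates those seen on only one platform, which are the likeliest compatibility issues rather than general defects.

```python
from collections import Counter

# Hypothetical defect log: (defect ID, platform where it was observed).
defect_log = [
    ("D-1", "Windows / Chrome"), ("D-1", "macOS / Safari"), ("D-1", "Android / Chrome"),
    ("D-2", "macOS / Safari"),
    ("D-3", "Android / Chrome"),
    ("D-4", "Android / Chrome"),
]


def defects_per_platform(log):
    """Total number of defects observed on each platform."""
    return Counter(where for _defect, where in log)


def single_platform_defects(log):
    """Defects seen on exactly one platform: likely compatibility issues."""
    platforms_by_defect = {}
    for defect_id, where in log:
        platforms_by_defect.setdefault(defect_id, set()).add(where)
    return {d: next(iter(p)) for d, p in platforms_by_defect.items() if len(p) == 1}


print(defects_per_platform(defect_log))
print(single_platform_defects(defect_log))
# {'D-2': 'macOS / Safari', 'D-3': 'Android / Chrome', 'D-4': 'Android / Chrome'}
```

Here D-1 appears everywhere, so it is probably a general defect, while Android / Chrome carries the most platform-specific issues.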

5. SUPRQ Score

Similar to an application’s SUS score, the Standardized User Experience Percentile Rank Questionnaire is a way for testers to rate an application on several key factors, including usability and appearance. This helps them to identify how customers might struggle to use the application on certain devices.

7 Mistakes & Pitfalls in Implementing Compatibility Tests

Here are seven significant mistakes to avoid when conducting compatibility testing:

1. Lack of real devices

While it would be impossible to test on every possible device combination, a testing team can still benefit from using as many real devices as they can source. Various platforms offer ‘real’ devices via cloud solutions in order to facilitate cross-browser compatibility testing in ways that can reflect native performance.

2. Avoiding older devices

Many users still access their applications on older versions of Windows or iOS; focusing wholly on new editions of popular devices and operating systems could limit a product’s reach. If the team doesn’t broaden their tests to ‘outdated’ devices, a significant amount of their audience might struggle to use the program.

3. Time mismanagement

There is often a high volume of devices and configurations that will require a compatibility test, meaning the team must manage their time to check as many of these as possible. This is important as the tests are typically still ongoing near the end of development; mismanagement could massively limit the number of checks.

4. Improper scheduling

It’s similarly paramount that teams make sure they conduct these tests at a reasonable stage in the program’s development, preferably after alpha testing and most forms of functional testing. This makes it easier to see if a problem is a general defect or specific to the devices that the team is looking at.

5. Not accounting for screen resolution

Screen resolution can be a far greater factor in compatibility than many testing teams recognize, especially as it is customizable and affects how a device displays graphical elements. Even with an approaching deadline, it’s vital that testing teams account for resolution in their strategy.

6. Lack of expertise

Testers need to be highly skilled to check website, browser, and software compatibility, among the many other forms these tests can take. If a test lead assigns compatibility checks to a team member without sufficient experience, this could slow down the tests and limit their accuracy.

7. No prior discussion

With compatibility tests often being time-consuming (and potentially requiring a wide range of devices), teams must fully establish the scope of their checks early in the quality assurance stage. For example, they must have a clear idea of which specific devices or configurations they intend to test before their checks even begin.

Best Practices for Compatibility Testing

The best ways to ensure high-quality compatibility tests include:

1. Test throughout development

Software can change significantly from one week to the next, which can affect how compatible the program is with its intended devices. Teams should perform software and cross-browser compatibility testing repeatedly to make sure the application still runs well on these platforms after each round of development changes.

2. Use real devices

Some compatibility testing tools offer cloud-hosted access to real devices, which closely reflect the user’s experience on that platform. This lets you ensure compatibility across more devices while maintaining a level of accuracy that purely simulated solutions may lack.

3. Prioritize the tests

With a limited amount of time to conduct these checks, compatibility testers might need to prioritize the most common devices, browsers, and operating systems. Similarly, the testing team should inspect the most critical features of the software first to guarantee basic functionality on these devices.

4. Integrate agile techniques

Some companies choose to adopt a sprint-based approach for their compatibility tests, allowing them to easily reach testing milestones – such as checking a specific number of devices. Agile encourages cross-departmental communication while also providing a set test structure that can guarantee consistent, rapid improvement.

5. Limit the testing scope

Quality assurance teams must know when to end their tests and even accept an instance of incompatibility. In such cases, rather than changing the software, the developers might raise the program’s minimum requirements if the issue would be too difficult to resolve through bug fixes.

Examples of Compatibility Test cases and scenarios

Compatibility test cases establish the testing team’s inputs, testing strategy, and expected results; the latter of which they compare with the actual results. As the checks cover many devices and configurations, this is often an extensive process.

These cases usually include:

• Test that the web application’s HTML displays properly.

• Check that the software’s JavaScript code is usable.

• See if the application works in different resolutions.

• Test that the program can access the file directory.

• Make sure the app connects to all viable networks.

Here are specific examples of compatibility testing in software testing for different programs:

1. Social networking app

Social networks commonly take the form of web apps on browsers and mobile apps for corresponding devices; both types require equally thorough testing. For example, this mobile app must be fully operational on iOS and Android devices at a minimum – with the team checking old and new devices under each operating system. If a specific model of iPhone cannot render animated GIF files, for example, the team must identify what is causing this to ensure a consistent user experience.

2. Video game

Video games generally offer customizable graphical options that users can change to match their machine; this includes controlling the screen’s resolution and ensuring the UI scales appropriately. Certain issues can emerge depending on the player’s specific hardware, with antialiasing errors leading to grainy graphics. This could be due to a common graphics card that is incompatible with the company’s texture rendering. Depending on the exact problem, this might even manifest as a crash when certain devices launch the game.

3. CRM cloud system

Customer relationship management solutions make heavy use of databases, mainly hosted in cloud storage, to retrieve information about transactions, vendors, and other important facets of the business. Testers should make sure this database and its cloud services work on different networks, including 3G and 4G for users who need access without a Wi-Fi or wired connection. The team must also inspect a wide range of operating systems, as certain glitches might only appear on Linux devices, for example.

Manual or automated Compatibility Tests?

Automation could be very helpful for compatibility tests, letting teams check high numbers of devices far more quickly than a manual approach. However, manual testing might be more appropriate when conducting checks on a limited number of browsers and devices – for example, a video game only available on two platforms. The software’s usability is often a core factor in compatibility tests and usually requires a human perspective which can better identify graphical rendering issues. Robotic process automation may help with this by implementing software robots that can more easily mimic a human user’s approach to compatibility tests.

For programs designed for a wide range of devices, such as mobile and web apps, automation allows the team to secure broader test coverage. They could even use hyperautomation to intelligently outsource these checks in a way that still ensures human testers inspect these platforms for user-specific functionality. Compatibility testing in manual testing is still mandatory for some tasks – such as checking the UI displays correctly on every device. This means the best approach could be a blended strategy which can test more devices overall through automation, increasing their pace while still accounting for the significance of usability.

What do you need to start Compatibility Testing?

The major prerequisites for compatibility testing typically include:

1. Qualified testing staff

Compatibility testers generally need a broader skill set than other quality assurance roles because they check a wider range of devices and often encounter more errors. These skills might include problem-solving, communication, and attention to detail. Team leaders should assign testers who have experience examining the same application on many platforms.

2. Strong device emulation

It can be difficult to source and test every physical device within the team’s scope, making emulation essential for seeing how various platforms respond to the same program. This process is rarely perfect and testers must look at the many emulators and automated testing tools available to see which one offers the most accuracy.

3. Clear testing scope

The team should have an understanding of their scope before the checks begin; especially as this could decide the pace at which they work. While the program may aim to cover many platforms, the testers should identify an appropriate cut-off point. For example, testing operating systems released before Windows 7 might lead to diminishing returns.

4. Time management

Compatibility testing can occur at any point throughout the quality assurance stage, but is commonly saved for the end of development – when the program is stable and feature-complete. However, testers should consider compatibility long before this as it is often time-consuming. Robust planning in advance helps the team ensure they have sufficient time for every check.

Compatibility Testing checklist, tips & tricks

Here are additional tips that quality assurance teams must keep in mind when enacting compatibility tests:

1. Don’t target absolute coverage

While every testing strategy aims to maximize test coverage, teams typically stop short of 100% because the final stretch brings diminishing returns: minor improvements for very few users. In the context of compatibility, teams should recognize when too few of their customers use a device for these checks to be worthwhile.

2. Prioritize cross-browser combinations

Cross-browser compatibility testing involves checking each browser against various operating systems. Testers must use comprehensive analytics about their audience to determine the most popular of both and use this to guide their approach. They might even develop a browser compatibility matrix, which establishes the scope of these checks and their diverse configurations.
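A browser compatibility matrix can be drafted directly from usage data. The Python sketch below sorts hypothetical OS and browser usage shares and keeps adding combinations until a target share of users is covered; the 95% target is an assumed threshold that reflects the point above about not chasing absolute coverage.

```python
# Hypothetical audience analytics: share of sessions for each (OS, browser) pair.
usage_share = {
    ("Windows", "Chrome"): 0.38,
    ("Android", "Chrome"): 0.22,
    ("iOS", "Safari"): 0.15,
    ("Windows", "Edge"): 0.09,
    ("macOS", "Safari"): 0.06,
    ("macOS", "Chrome"): 0.04,
    ("Windows", "Firefox"): 0.03,
    ("Linux", "Firefox"): 0.02,
    ("Windows", "Opera"): 0.01,
}


def build_matrix(shares, target_coverage=0.95):
    """Add the most-used combinations until the target share of users is covered."""
    matrix, covered = [], 0.0
    for combo, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= target_coverage:
            break
        matrix.append(combo)
        covered += share
    return matrix, covered


matrix, covered = build_matrix(usage_share)
for os_name, browser in matrix:
    print(f"{os_name:<8} {browser}")
print(f"Covers about {covered:.0%} of sessions with {len(matrix)} of {len(usage_share)} combinations")
```

Raising `target_coverage` to 1.0 would pull in every remaining combination, each adding only a sliver of users; this is the diminishing-returns trade-off expressed in code.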

3. Verify layout

Ensuring a consistent experience is at the heart of compatibility testing and these checks must go deeper than identifying if the program’s features work on different devices. Teams should also verify the software’s overall layout, including the alignment of any forms or tables, as well as the integrity of the program’s CSS and HTML.

4. Check APIs

Application programming interfaces are a core part of how browsers run web apps, making them vital for a team’s cross-browser compatibility testing. Different web browsers support different sets of APIs, and their updates over time could affect compatibility. Testers must check these regularly, even if the company uses a similar API for each program.

5. Examine the SSL certificate

SSL certificates authenticate a website and let browsers encrypt its traffic over HTTPS. A website or web app could have a certificate that certain browsers do not accept, for example because an older browser does not trust the issuing certificate authority. Testers should therefore validate the certificate on all major platforms to make sure users feel safe on their website.
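Part of this validation can be scripted with Python's standard `ssl` module. The sketch below fetches a certificate using the system's default trust store and then checks its validity window and host name. It only reflects one client's view: each browser ships or uses its own trust store, so real browsers still need to be tested. The helper names, the 30-day warning window, and the simplified wildcard matching are assumptions for illustration.

```python
import socket
import ssl
import time
from typing import List, Optional


def fetch_certificate(hostname: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Open a verified TLS connection and return the peer certificate (raises on verification failure)."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()


def _host_matches(pattern: str, hostname: str) -> bool:
    """Simplified matching: an exact name or a single leading wildcard label."""
    pattern, hostname = pattern.lower(), hostname.lower()
    if pattern.startswith("*."):
        suffix = pattern[1:]  # e.g. ".example.com"
        head = hostname[: -len(suffix)] if hostname.endswith(suffix) else ""
        return bool(head) and "." not in head
    return pattern == hostname


def certificate_problems(cert: dict, hostname: str, now: Optional[float] = None, warn_days: int = 30) -> List[str]:
    """Check a certificate dict (as returned by getpeercert) for date and host-name problems."""
    now = time.time() if now is None else now
    problems: List[str] = []
    not_before = ssl.cert_time_to_seconds(cert["notBefore"])
    not_after = ssl.cert_time_to_seconds(cert["notAfter"])
    if now < not_before:
        problems.append("certificate not yet valid")
    if now > not_after:
        problems.append("certificate expired")
    elif not_after - now < warn_days * 86400:
        problems.append("certificate expires soon")
    names = [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]
    if not any(_host_matches(name, hostname) for name in names):
        problems.append(f"{hostname} not listed in subjectAltName")
    return problems


if __name__ == "__main__":
    host = "example.com"  # hypothetical target; replace with your own site
    print(certificate_problems(fetch_certificate(host), host))
```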

6. Validate video players

Programs that display video, such as streaming services or ad-supported freemium mobile games, should undergo testing to ensure these videos play correctly on all intended devices. For many apps, these checks will include both desktop and mobile devices and could look at the video's quality, speed, and frame rate.
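Frame rate and smoothness can be compared across devices by recording when each frame is presented and summarizing the gaps between frames. This sketch assumes such timestamps (in milliseconds) have already been collected on each device by some test harness; the function name `playback_report` and the rule that a gap longer than 1.5 times the expected frame interval counts as a stall are hypothetical choices.

```python
from typing import Dict, List


def playback_report(frame_times_ms: List[float], target_fps: float, stall_factor: float = 1.5) -> Dict[str, float]:
    """Summarize playback smoothness from frame presentation timestamps (milliseconds)."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frames")
    intervals = [later - earlier for earlier, later in zip(frame_times_ms, frame_times_ms[1:])]
    expected_ms = 1000.0 / target_fps
    # A gap noticeably longer than one frame interval means the viewer saw a hitch.
    stalls = sum(1 for gap in intervals if gap > stall_factor * expected_ms)
    duration_s = (frame_times_ms[-1] - frame_times_ms[0]) / 1000.0
    return {
        "average_fps": (len(frame_times_ms) - 1) / duration_s,
        "stalls": stalls,
        "max_gap_ms": max(intervals),
    }


# Hypothetical capture from a 25 fps video with one delayed frame.
report = playback_report([0, 40, 80, 120, 200, 240], target_fps=25)
print(report["stalls"], report["max_gap_ms"])
# 1 80
```

Running the same report on each target device makes it easy to spot platforms where playback falls noticeably below the intended frame rate.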

5 best Compatibility Testing tools & software

The most effective free and paid tools for testing compatibility include:

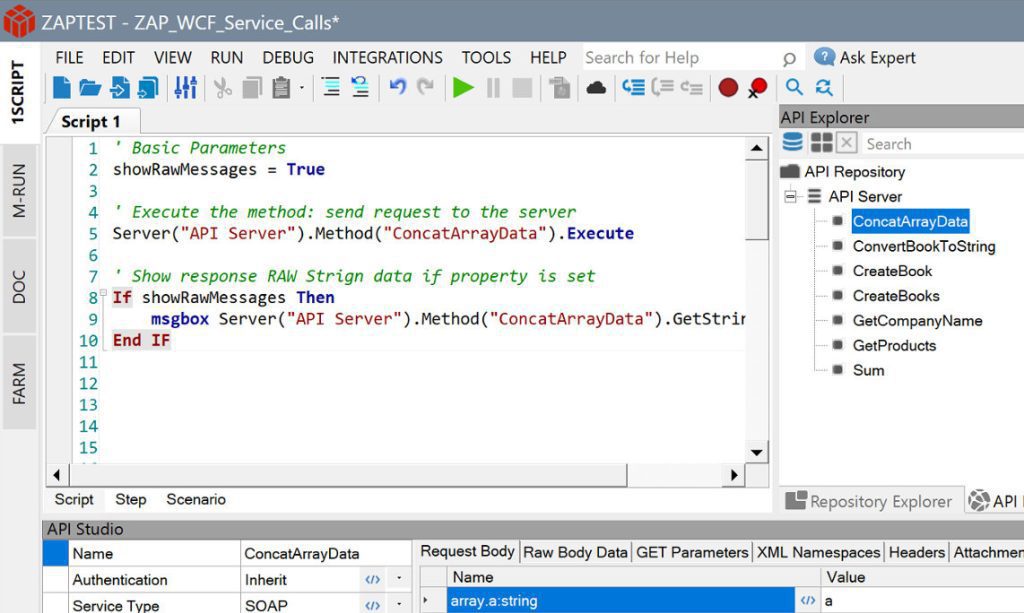

1. ZAPTEST Free & Enterprise Edition

ZAPTEST offers excellent functionality in both its Free and Enterprise (paid) editions, helping companies of any size (or budget) with their compatibility checks. Companies that choose ZAPTEST's Enterprise version can even enjoy a return of up to 10x their original investment. The solution's 1SCRIPT feature is specifically attuned to the needs of compatibility testers, allowing them to run the exact same tests on multiple platforms without modifying the code to match. Add state-of-the-art RPA functionality at no extra cost, and you have a one-stop Task-Agnostic Automation solution.

2. LambdaTest

LambdaTest takes a cloud-based approach, giving teams access to 3,000 automated devices, though with a significant focus on web browsers, which might limit this solution's effectiveness for certain programs. The platform specializes in continuous testing, integrating the quality assurance process more closely with development. Its checks also let users set the screen resolution, making cross-browser compatibility testing much easier. The solution follows a freemium model, though the free tier limits the number of tests and does not include real devices.

3. BrowserStack

Similar to LambdaTest, BrowserStack provides access to 3,000 real devices; its catalog also includes legacy and beta browser versions. While people are more likely to upgrade their browser than their OS, there may still be many people using older browser versions, and BrowserStack accommodates them. Users can also run geolocation tests to see how websites and web apps look in different countries. However, there are no free or freemium options, and real-device testing can be slow.

4. TestGrid

TestGrid allows for parallel testing, letting teams check several combinations at the same time to speed up the process. This solution also integrates well with the testing and development workflow – possibly facilitating an agile approach by forming a key part of the department’s sprints. However, TestGrid sometimes struggles with connecting to cloud devices and browsers. On top of this, the program is quite limited in terms of load testing, documentation, and adding new devices to the company’s setup.
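The general idea behind parallel compatibility testing can be sketched with Python's `concurrent.futures`: the same suite is dispatched to every browser/OS combination at once instead of one after another. This is not TestGrid's API; `run_suite` is a hypothetical stand-in for a remote test session, and its simulated failure exists only so the example produces a mixed result. Threads are a reasonable fit here because remote sessions spend most of their time waiting on the network.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Dict, Tuple

# Hypothetical combinations to cover.
BROWSERS = ["Chrome", "Firefox", "Edge"]
SYSTEMS = ["Windows 11", "macOS 14"]


def run_suite(browser: str, os_name: str) -> bool:
    """Hypothetical stand-in for one remote test session; returns True if the suite passed."""
    # A real implementation would open a session on a cloud device and run the same tests there.
    return not (browser == "Firefox" and os_name == "macOS 14")  # simulated failure


def run_all(max_workers: int = 4) -> Dict[Tuple[str, str], bool]:
    """Run the same suite on every combination concurrently and collect pass/fail results."""
    combos = list(product(BROWSERS, SYSTEMS))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with combos.
        results = pool.map(lambda combo: run_suite(*combo), combos)
        return dict(zip(combos, results))


if __name__ == "__main__":
    for (browser, os_name), passed in run_all().items():
        print(f"{browser} on {os_name}: {'pass' if passed else 'FAIL'}")
```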

5. Browsera

Browsera mainly focuses on testing websites to ensure they display properly on various devices, browsers, and operating systems. Because Browsera is cloud-based, quality assurance teams don't need to install this virtual testing lab on their own machines. Browsera can also compare output across browsers to intelligently spot layout issues and JavaScript errors that even a human tester might miss. However, Browsera does not support several common browsers, including Opera, and offers only basic test functionality for free.

Conclusion

Compatibility testing is critical for a successful quality assurance strategy, allowing teams to validate their apps on a wide range of devices. Without this technique, companies may not discover that their software fails for much of their target audience until after launch. Fixing problems at that stage costs far more time and money than catching them through pre-release testing, and applications such as ZAPTEST can streamline this testing even further. With 1SCRIPT and many other features, such as parallel testing, available for free, choosing ZAPTEST as your testing tool could transform any project while giving teams total confidence in their application.