System testing is a type of software testing that performs checks on the system as a whole.

It involves integrating all the individual modules and components of the software you have developed and testing whether the system works together as expected.

System testing is an essential software testing step that will further enable testing teams to verify the quality of the build, before it’s released to end-users.

In this article, we’ll explore system testing: what it is, how it works, who carries system testing out and what approaches and tools testing teams can take to make system testing faster and more reliable.

In short, you’ll find everything you need to know about system testing here.

What is system testing?

System testing is a type of software testing that is always conducted on an entire system. It checks whether the system complies with its requirements, whatever they are.

Testers carry out system testing to evaluate both the functional and non-functional requirements of the system after individual modules and components have been integrated together.

System testing is a category of Black Box testing, which means that it only tests external working features of the software, as opposed to testing the internal design of the application.

Testers don’t require any knowledge of the programming and structure of the software code to fully evaluate a software build during system testing. Instead, testers are simply assessing the performance of the software from the perspective of a user.

1. When do we need to do system testing?

System testing is carried out after integration testing and before acceptance testing. System testing is carried out by the software testing team on a regular basis to ensure that the system is running as it should at key stages during development.

Some examples of occasions when system testing is carried out are:

● During the development of new software versions.

● During the application launch when alpha and beta testing take place.

● After unit and integration testing is finished.

● When the requirements of the system build are complete.

● When other testing conditions are met.

Like other forms of software testing, it’s recommended to carry out system testing regularly to ensure that the software is running as it should.

The frequency with which system testing can be carried out depends on the resources of your team and the approaches and tools that you use to carry out system software testing.

2. When you don’t need system tests

If you haven’t carried out preliminary tests such as smoke tests, unit tests, and integration tests yet, then you’re not ready to start system testing.

It’s always important to conduct system testing after integration testing is complete, but if you run into bugs and issues which cause the system test to fail, you can stop system testing and return to development and bug fixing before proceeding further.

3. Who is involved in system testing?

System testing is usually carried out by testers and QA teams rather than developers. System testing only considers the external elements of software, or in other words, the experience of users trying to access the software’s features.

This means that testers who carry out system testing don’t require any technical knowledge of computer coding, programming, and other aspects of software development that might require input from developers.

The only exception to this is in the case of automated system testing, which could require some input from developers depending on how you approach this.

What do we test in system testing?

System testing is a type of software testing that is used to test both functional and non-functional aspects of the software.

It can be used to test a huge variety of functionalities and features, many of which are covered in more depth under types of system testing.

Some of the software aspects that system testing verifies are detailed below.

1. Functionality

Testers use system testing to verify whether different aspects of the completed system function as they should.

Prior testing can be used to assess the structure and logic of the internal code and how different modules integrate together, but system testing is the first step that tests software functionality as a whole in this way.

2. Integration

System testing tests how different software components work together and whether they integrate smoothly with one another.

Testers may also test external peripherals to assess how these interact with the software and if they function properly.

3. Expected output

Testers use the software as a user would during system testing to verify the output of the software during regular use. They check whether the output for each functional and non-functional feature of the software is as expected.

If the software doesn’t behave as it should, the obvious conclusion is that it requires further development work.

4. Bugs and errors

System testing is used to assess the functionality and reliability of software across multiple platforms and operating systems.

System testers verify that software is free of bugs, performance issues, and compatibility problems across all of the platforms that the software is expected to run on.

Entry and exit criteria

Entry and exit criteria are used in system tests to ascertain whether or not the system is ready for system testing and whether or not the system testing requirements have been met.

In other words, entry and exit criteria help testers to evaluate when to start system testing and when to finish system testing.

Entry criteria

Entry criteria establish when testers should begin system testing.

Entry criteria can differ between projects depending upon the purpose of testing and the testing strategy being followed.

Entry criteria specify the conditions that must be met before system testing begins.

1. Testing stage

In most cases, it’s important that the system being tested has already finished integration testing and met the exit requirements for integration testing before system testing begins.

Integration testing should not have identified major bugs or issues with the integration of components.

2. Plans and scripts

Before system testing can start, the test plan should have been written, signed off, and approved.

You will also need to have test cases prepared in advance, as well as testing scripts ready for execution.

3. Readiness

Check that the testing environment is ready and that everything needed to test the non-functional requirements is available.

Readiness criteria may differ in different projects.
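
As a rough illustration, the entry criteria above could be tracked as a simple checklist. The sketch below uses Python; the `EntryCriteria` fields and helper functions are hypothetical names chosen for this example, and your own project may define different criteria.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EntryCriteria:
    """Hypothetical checklist mirroring the entry criteria described above."""
    integration_exit_met: bool   # integration testing met its exit criteria
    test_plan_approved: bool     # test plan written, signed off, and approved
    test_cases_ready: bool       # test cases and scripts prepared
    environment_ready: bool      # testing environment set up


def unmet_entry_criteria(criteria: EntryCriteria) -> List[str]:
    """Return the names of the criteria that are not yet satisfied."""
    return [name for name, met in vars(criteria).items() if not met]


def ready_for_system_testing(criteria: EntryCriteria) -> bool:
    """System testing may start only when every criterion is met."""
    return not unmet_entry_criteria(criteria)


status = EntryCriteria(
    integration_exit_met=True,
    test_plan_approved=True,
    test_cases_ready=False,
    environment_ready=True,
)
print(ready_for_system_testing(status))  # False
print(unmet_entry_criteria(status))      # ['test_cases_ready']
```

Listing the unmet criteria by name, rather than returning a bare yes or no, makes it easier to see what is blocking the start of testing.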

Exit criteria

Exit criteria determine the end stage of system testing and establish the requirements that must be met for system testing to be considered finished.

Exit criteria are often presented as a single document that simply identifies the deliverables of this testing phase.

1. Execution

The most fundamental exit criterion for completing system testing is that all test cases outlined in the system test plan have been executed properly.

2. Bugs

Before exiting system testing, check that no critical or priority bugs are in an open state.

Medium and low-priority bugs can be left in an open state provided the customer or end user accepts them.

3. Reporting

Before system testing ends, an exit report should be submitted. This report records the results of the system tests and demonstrates that testing has met the exit criteria required.
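
The three exit criteria above can be expressed as a single check. This is a minimal sketch in Python under stated assumptions: the `Bug` class, the priority labels, and the `exit_criteria_met` function are hypothetical, and "high" is assumed here to be the label for priority bugs that must be closed.

```python
from dataclasses import dataclass
from typing import List

# Assumed labels for bugs that must be closed before exiting system testing.
BLOCKING_PRIORITIES = {"critical", "high"}


@dataclass
class Bug:
    bug_id: str
    priority: str                      # "critical", "high", "medium", or "low"
    is_open: bool
    accepted_by_customer: bool = False


def exit_criteria_met(executed: int, planned: int,
                      bugs: List[Bug], report_submitted: bool) -> bool:
    """Check execution, open bugs, and reporting in turn."""
    if executed != planned or not report_submitted:
        return False
    for bug in bugs:
        if not bug.is_open:
            continue
        # Open critical or high-priority bugs always block the exit.
        if bug.priority in BLOCKING_PRIORITIES:
            return False
        # Open medium or low-priority bugs need the customer's acceptance.
        if not bug.accepted_by_customer:
            return False
    return True


bugs = [
    Bug("B-1", "critical", is_open=False),
    Bug("B-2", "low", is_open=True, accepted_by_customer=True),
]
print(exit_criteria_met(executed=40, planned=40, bugs=bugs, report_submitted=True))  # True
```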

System testing life cycle

The system testing life cycle describes each phase of system testing from the planning stages through to reporting and completion.

Understanding each stage of the system testing life cycle will help you understand how to carry out system testing, and how it works.

Stage 1: Create a test plan

The first stage of system testing is creating a system test plan.

The purpose of a test plan is to outline the expectations of the test cases as well as the test strategy.

The test plan usually defines testing goals and objectives, scope, areas, deliverables, schedule, entry and exit criteria, testing environment, and the roles and responsibilities of those people involved in software system testing.

Stage 2: Create test cases

The next stage of system testing is creating test cases.

Test cases define the precise functions, features, and metrics that you’re going to test during system testing. For example, you might test how a particular function works or how long a specific loading time is.

For each test case, specify a test case ID and name alongside information about how to test this scenario and what the expected result of the test case is.

You can also outline the pass/fail criteria for each test case here.
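
To make this concrete, here is one possible way to record a test case in Python. The `SystemTestCase` class, its fields, and the login scenario are illustrative assumptions rather than a standard format; many teams keep the same information in a spreadsheet or test management tool instead.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SystemTestCase:
    """Hypothetical record holding the details suggested above."""
    case_id: str
    name: str
    steps: List[str]          # how to test the scenario
    expected_result: str      # what should happen if the system works

    def evaluate(self, actual_result: str) -> str:
        """Simple pass/fail criterion: actual must match expected exactly."""
        return "PASS" if actual_result == self.expected_result else "FAIL"


login_case = SystemTestCase(
    case_id="ST-001",
    name="Registered user can log in",
    steps=[
        "Open the login page",
        "Enter a valid username and password",
        "Click the login button",
    ],
    expected_result="Dashboard page is displayed",
)

print(login_case.evaluate("Dashboard page is displayed"))  # PASS
print(login_case.evaluate("Error page is displayed"))      # FAIL
```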

Stage 3: Create test data

Once you have created test cases, you can create the test data that you will require to perform the tests.

Test data describes the inputs that the testing team will need to test whether their actions result in the expected outcomes.

Stage 4: Execute test cases

This stage is what most people may think of when they think of system testing: the execution of the test cases or the actual testing itself.

The testing team will execute each test case individually while monitoring the results of each test and recording any bugs or faults that they encounter.

Stage 5: Report and fix bugs

After executing the test cases, testers write up a system test report that details all the issues and bugs that arose during testing.

Some of the bugs that the test reveals might be small and easily fixable, whereas others could set the build back. Fix these bugs as they arise and repeat the test cycle (which includes other types of software testing like smoke testing) until it passes with no major bugs.

Clearing up the confusion:

System testing vs integration testing vs user acceptance testing

Many people confuse system testing with other types of software testing like integration testing and user acceptance testing (UAT).

While system testing, integration testing, and user acceptance testing do share some characteristics, they’re different types of testing that serve different purposes and each type of testing must be carried out independently from the others.

What is integration testing?

Integration testing is a type of software testing where software modules and components are tested as a group to assess how well they integrate together.

Integration testing is the first type of software testing that is used to test individual modules working together.

Integration testing is carried out by testers in a QA environment, and it’s essential because it exposes defects that may arise when individually coded components interact together.

What are the differences between system testing and integration testing?

While both system testing and integration testing test the software build as a whole, they are different types of software testing that work distinctly.

Integration testing happens first, and system testing happens after integration testing is complete. Other major differences between system testing and integration testing are:

1. Purpose:

The purpose of integration testing is to assess whether individual modules work together properly when integrated. The purpose of system testing is to test how the system works as a whole.

2. Type:

Integration testing purely tests functionality, and it’s not a type of acceptance testing.

By contrast, system testing tests both functional and non-functional features, and it falls under the category of acceptance testing (but not user acceptance testing).

3. Technique:

Integration testing uses both black box and white box testing to assess the software build from the perspective of both a user and a developer, whereas system testing uses purely black box testing methods to test software from the user’s perspective.

4. Value:

Integration testing is used to identify interface errors, while system testing is used to identify system errors.

What is user acceptance testing?

User acceptance testing, or UAT, is a type of software testing that’s carried out by the end-user or the customer to verify whether the software meets the desired requirements.

User acceptance testing is the last form of testing to take place before the software moves into the production environment.

It occurs after functional testing, integration testing, and system testing have already been completed.

What are the differences between system testing and user acceptance testing?

User acceptance testing and system testing both validate whether a software build is working as it should, and both types of testing focus on how the software works as a whole.

However, there are lots of differences between system testing and user acceptance testing:

1. Testers:

While system testing is carried out by testers (and sometimes developers), user acceptance testing is carried out by end users.

2. Purpose:

The purpose of user acceptance testing is to assess whether a software build meets the end user’s requirements, and the purpose of system testing is to test whether the system complies with its specified requirements.

3. Method:

During system testing, individual units of the software build are integrated and tested as a whole. During user acceptance testing, the system is tested as a whole by the end user.

4. Stage:

System testing is performed as soon as integration testing has been completed and before user acceptance testing takes place. User acceptance testing takes place just before the product is released to early adopters.

Types of system testing

There are over 50 different types of system testing that you can adopt if you want to test how your software build works in its entirety.

However, in practice, only a few of these types of system testing are actually used by most testing teams.

The type of system testing that you use depends on lots of different factors, including your budget, time constraints, priorities, and resources.

1. Functionality testing

Functionality testing is a type of system testing that’s designed to check the individual features and functions of the software and assess whether they work as they should.

This type of system testing can be carried out manually or automatically, and it’s one of the core types of system testing that testing teams carry out.

2. Performance testing

Performance testing is a type of system testing that involves testing how well the application performs during regular use.

It usually means testing the performance of an application when multiple users are using it at once.

In performance testing, testers will look at loading times as well as bugs and other issues.

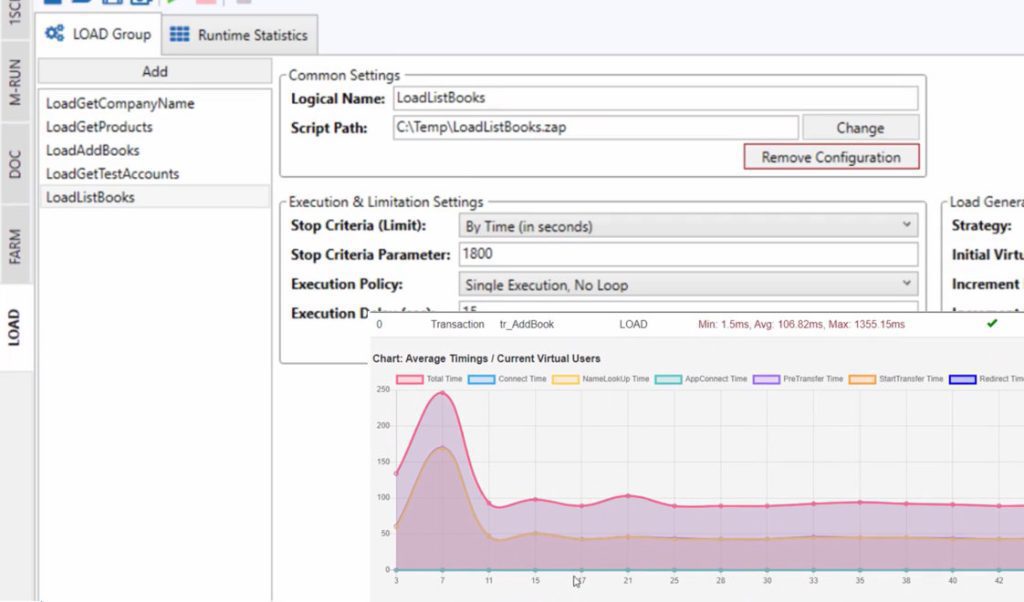

3. Load testing

Load testing is a type of system testing that testers conduct to assess how well an application handles heavy loads.

For example, testers might test how well the application runs when lots and lots of users are trying to carry out the same task at the same time, or how well the application carries out multiple tasks at once.
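
The idea of many users carrying out the same task at once can be sketched with Python’s standard library. In this hedged example, `place_order` is a hypothetical stand-in for a real request to the system under test, and the short sleep only simulates processing time; a real load test would call your application and would normally use a dedicated load testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def place_order(user_id: int) -> bool:
    """Stand-in for a real request to the system under test (hypothetical)."""
    time.sleep(0.01)  # simulate processing time
    return True


def timed_call(user_id: int):
    """Run one simulated user action and measure how long it takes."""
    start = time.perf_counter()
    ok = place_order(user_id)
    return ok, time.perf_counter() - start


def run_load_test(concurrent_users: int) -> dict:
    """Run the same task for many simulated users at the same time."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(timed_call, range(concurrent_users)))
    latencies = [elapsed for _, elapsed in results]
    return {
        "requests": len(results),
        "failures": sum(1 for ok, _ in results if not ok),
        "max_latency_s": max(latencies),
        "avg_latency_s": sum(latencies) / len(latencies),
    }


summary = run_load_test(concurrent_users=50)
print(summary["requests"], summary["failures"])
```

Comparing the failure count and latency figures at different user counts gives a first impression of how the application copes as load increases.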

4. Scalability testing

Scalability testing is a type of software system test that tests how well the software scales to meet the needs of different projects and teams.

This is a type of non-functional testing that involves assessing how the software performs for different numbers of users or when used in different locations and with different resources.

5. Usability testing

Usability testing is a type of system testing that involves testing how usable the application is.

This means that testers assess and evaluate how easy the application is to navigate and use, how intuitive its functions are, and whether there are any bugs or issues that may cause usability issues.

6. Reliability testing

Reliability testing is a type of system testing that checks how reliable the software is.

It requires testing the software functions and performance within a controlled setting to assess whether the results of one-time tests are reliable and replicable.

7. Configuration testing

Configuration testing is a type of system testing that assesses how well the system performs when working alongside various types of software and hardware.

The purpose of configuration testing is to identify the best configuration of software and hardware to maximize the performance of the system as a whole.

8. Security testing

Security testing is a type of system testing that evaluates how the software performs in relation to security and confidentiality.

The purpose of security testing is to identify any potential vulnerabilities and hazards that could be the source of data breaches and violations that could result in the loss of money, confidential data, and other important assets.

9. Migration testing

Migration testing is a type of system testing that’s carried out on software systems to assess how they might interact with older or newer infrastructures.

For example, testers might assess whether older software elements can migrate to a new infrastructure without bugs and errors arising.

What you need to start running system testing

Before system testing can begin, it is important you have a clear plan of bringing together the resources and tools needed for a successful and smooth system testing process.

It’s a relatively involved process whether you’re testing manually, automatically, or using both approaches, so knowing what you’ll need before you get started is the best way to reduce the risk of delays and disruptions during testing.

1. A stable build that’s almost ready to launch

System testing is one of the last stages of software testing that happens before release: the only type of testing that occurs after system testing is user acceptance testing.

It’s important that, before you start system testing, you’ve already conducted other types of software testing including functional testing, regression testing, and integration testing, and that your software build has met the exit criteria for each of these types of software tests.

2. System testing plans

Before you start testing, write up formal documentation that outlines the purpose and objectives of the tests you’re going to carry out and defines the entry and exit criteria of system testing.

You might use this plan to outline individual test scenarios that you’re going to test or to define your expectations for how the system will perform.

The system testing plan should make it easy for testers to design and conduct system testing by following the plan.

3. Test cases

It’s important to outline the test cases that you’re going to test during system testing before system testing begins.

Test cases may not be exhaustive, but they should be complete enough to test the most important functional and non-functional features of the system and to give an accurate overview of the system’s workings as a whole.

4. Skills and time

Make sure that you allocate sufficient resources to system testing before your system tests begin.

System testing can take a relatively long time, especially when compared to other types of testing like smoke testing.

You’ll need to identify which people in your team are going to conduct the testing and how long they’ll need to block out before testing begins.

5. System testing tools

System testing can be carried out manually or it can be automated, but regardless of which approach you take to testing, it’s possible to streamline and optimize your system testing workflows by adopting tools and technology that help with different aspects of testing.

For example, you might use AI tools to automate some of your system tests, or you might use document management software to help track the progress and results of your testing.

The system testing process

Before you start, it’s important to understand the system testing process and how to carry out each of its steps.

This step-by-step plan follows the system testing life cycle detailed earlier but goes into further detail to outline the individual steps involved in system testing.

Step 1: Create a system testing plan

Create your system testing plan before you start system testing. Each system testing plan will be different, but your plan should include at least an outline of the purpose of testing as well as the relevant entry and exit criteria that determine when testing should begin and when testing is finished.

Step 2: Generate test scenarios and test cases

The next stage is generating test scenarios and test cases that outline exactly what you’re going to test and how you’re going to test it.

Include real-life test scenarios that test how the software works under typical use, and for each test case that you write up include details on the pass and fail criteria of the test and what the expected outcome is.

Step 3: Create the required test data

Create the required test data for each test scenario that you’re planning to execute.

The test data that you’ll need for each test scenario is any data that affects, or is affected by, that particular test.

It’s possible to manually generate test data or you can automate this stage if you want to save time and have the resources to do so.
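
If you choose to automate this stage, a short script can generate repeatable test data. The sketch below is one possible approach in Python: it produces usernames at the boundaries of an assumed length rule (3 to 20 characters). The rule, the function names, and the fixed random seed are all assumptions made for illustration.

```python
import random
import string


def boundary_lengths(min_len: int, max_len: int):
    """Lengths just outside, on, and just inside each limit."""
    return [min_len - 1, min_len, min_len + 1, max_len - 1, max_len, max_len + 1]


def make_username(length: int, rng: random.Random) -> str:
    """Build a lowercase username of the requested length."""
    return "".join(rng.choice(string.ascii_lowercase) for _ in range(length))


def generate_username_data(min_len: int = 3, max_len: int = 20, seed: int = 42):
    """Return test inputs paired with the outcome each one should produce."""
    rng = random.Random(seed)  # fixed seed keeps the data repeatable
    rows = []
    for length in boundary_lengths(min_len, max_len):
        rows.append({
            "username": make_username(length, rng),
            "expected_valid": min_len <= length <= max_len,
        })
    return rows


for row in generate_username_data():
    print(len(row["username"]), row["expected_valid"])
```

Pairing each input with its expected outcome means the same data can later be used to decide whether a test case passed or failed.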

Step 4: Set up the testing environment

The next step is setting up the testing environment ready to run your system tests. You will get better results from your system testing if you set up a production-like testing environment.

Make sure that your testing environment includes all the software and hardware that you want to test during configuration and integration testing.

Step 5: Execute the test cases

Once you have set up the testing environment, you can execute the test cases that you created in the second step.

You can either execute these test cases manually, or you can automate the test case execution using a script.

As you carry out each test case, make a note of the results of the test.
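
When test case execution is scripted, noting the results can be automated too. The following Python sketch shows one simple way to do this; the `run_test_cases` function and the three example checks are hypothetical, and a real project would more likely use an established test framework.

```python
from typing import Callable, Dict, List, Tuple


def run_test_cases(cases: List[Tuple[str, Callable[[], bool]]]) -> List[Dict[str, str]]:
    """Execute each test case in order and record its outcome."""
    results = []
    for case_id, check in cases:
        try:
            status = "PASS" if check() else "FAIL"
            note = ""
        except Exception as exc:
            # A crash is recorded instead of stopping the whole run.
            status = "ERROR"
            note = f"{type(exc).__name__}: {exc}"
        results.append({"case_id": case_id, "status": status, "note": note})
    return results


# Hypothetical checks standing in for real interactions with the system.
def cart_total_is_correct() -> bool:
    return 1999 * 2 == 3998  # prices in cents


def search_returns_results() -> bool:
    return len([]) > 0  # deliberately fails for the example


def export_report() -> bool:
    raise TimeoutError("export took too long")


results = run_test_cases([
    ("ST-001", cart_total_is_correct),
    ("ST-002", search_returns_results),
    ("ST-003", export_report),
])
for result in results:
    print(result["case_id"], result["status"], result["note"])
```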

Step 6: Prepare bug reports

Once you’ve executed all the test cases outlined, you can use the results of each test to write up bug reports highlighting in detail all of the bugs and defects that you identified during the system tests.

Pass this report on to the developers for bug repairs and fixes. The bug repairs stage can take some time, depending on the complexity and severity of the bugs that you identify.

Step 7: Re-test after bug repairs

Once the software developers have fixed the bugs and sent the software back for further testing, it’s important to re-test the software build.

Crucially, system testing should not be considered complete until this step has been passed with no bugs or defects showing.

It’s not enough to assume that all bugs have been fixed and that the build is now ready to move to user acceptance testing.

Step 8: Repeat the cycle

The final step is simply to repeat this cycle as many times as you need to pass step seven without identifying any bugs or defects.

Once the system test passes and you have met all the exit criteria outlined in the system testing plan, it’s time to move on to user acceptance testing and, eventually, the release of the product.

Manual vs automated system tests

Like other types of software testing, system testing can be either carried out manually by human testers or at least partially automated by software. Software testing automation streamlines the testing process and saves time and money, but sometimes it’s important to carry out manual system testing as well.

There are pros and cons to both manual and automated system testing, and it’s important to understand these before deciding on which type of system testing you want to undertake.

Manual system testing

Manual system testing means carrying out system testing manually, without automating part or all of the testing process.

Manual system testing takes longer to carry out than automated testing, but it also means that the testing process benefits from human insight and judgement.

Manual testing is often combined with automated testing to maximize the efficacy and accuracy of system testing and other types of software tests.

1. The benefits of performing manual system testing

There are many benefits to performing manual system testing, and these benefits explain why many testing teams opt to continue with manual testing as well as automated testing even after automating test scripts.

Complexity

Manual testing is suitable for testing complex test scenarios that are not always easy to automate.

If the requirements of your system testing are complicated or detailed, you may find it easier to test these scenarios manually than to write automated test scripts for them.

Exploratory testing

When you automate any kind of software test, the test follows its script and only tests exactly those features that you’ve programmed the test to assess.

By contrast, when you carry out manual testing, you can choose to explore different features as and when they pique your interest, for example, if you notice something that doesn’t look the way it should in the software interface.

Simplicity

Once you’ve written your automated test scripts, automated testing is easy. But it usually requires development expertise to write test scripts in the first place, and smaller testing teams may not have the resources to make this happen.

Manual testing requires no technical expertise or knowledge of coding.

2. The challenges of manual system tests

Manual testing also brings its own challenges. Software testing teams that only carry out manual system testing without incorporating elements of automated testing may find themselves disadvantaged compared to those teams that use both approaches.

Time-consuming

As you might expect, carrying out manual system testing is more time-consuming than automated system testing. This is particularly a weakness when agile testing is required.

This means that it’s less practical to carry out regular or very thorough system tests, and this in turn could affect the reliability and scope of the results.

Human error

When humans carry out manual testing, there’s always room for human error. Humans make mistakes and get bored or distracted, and this is particularly likely when carrying out repetitive, time-consuming tests that may be more likely to tire out testers.

Test coverage

Manual tests don’t offer the same breadth of coverage that automated tests do.

Because testers have to carry out manual tests themselves, they can’t cover as much ground as automated tests can, and this could lead to less comprehensive test results.

When to use manual software testing

Manual software testing has not been replaced by automated testing, and manual testing is still an important phase of the system testing process.

Manual testing is suitable for smaller software teams that may not have the resources to automate system testing independently, and even teams that have adopted automated testing should use manual testing to assess more complex test scenarios or test cases where exploratory testing offers value.

System testing automation

It’s possible to automate system testing either by writing test scripts yourself or by using hyperautomation tools and processes to partially or fully automate the system testing process.

Most often, automated system testing is combined with manual system testing to provide the best balance of coverage, efficiency, and accuracy.

1. The benefits of system testing automation

Automated system testing is growing in popularity in part because of the wide availability of automated testing tools that make it easy to automate software system testing.

There are lots of benefits to automated system testing, especially when combined with manual testing.

Efficiency

Automated testing is more efficient than manual testing because it’s possible to run automated tests in the background while testers and developers carry out other tasks.

This makes it more practical to carry out automated testing on a more regular basis and reduces the need to dedicate a large number of resources to testing after the automated tests have been set up.

Greater test coverage

Automated tests can often cover a greater area of the software build than manual tests can, in large part because of their increased efficiency.

When testers carry out system testing manually, they must pick and choose the most important test cases to assess, whereas automated testing gives software teams the flexibility to test more scenarios in less time.

Remove human error

Automated tests are not vulnerable to human errors in the same way that manual tests are.

When conducting repetitive, time-consuming tests that can tire out manual testers, automated tests continue to test software at the same rate and accuracy level.

Humans are also more likely to focus on finding easy bugs than difficult bugs, which can cause some important but less obvious bugs to be missed.

Standardize testing

When you write a script to automate system testing, you’re creating a set of instructions for your software testing tool to follow.

This effectively standardizes the software tests that you run and ensures that each time you run a test, you’re running the same test and testing software to the same standards.

2. The challenges of system testing automation

Automated system testing isn’t perfect, which is why it’s often conducted alongside manual testing for the best results. It’s more efficient than manual testing but may not offer quite as much in terms of depth or qualitative data.

Flexibility

Because automated testing always follows a script, there is no flexibility to test mechanisms or features outside of those written into the testing script.

While this results in consistency, it does mean that bugs and errors can be missed if they haven’t been considered during the planning stages.

Resources

Automated tests take time and resources to set up.

While it’s possible to automate system testing using off-the-shelf software and tools, most of the time these still require tweaking to fit your software requirements.

Traditionally, automated testing has meant dedicating technical resources to write and run automated tests properly, though more and more tools like ZAPTEST provide advanced computer vision software automation in a codeless interface.

Complex test cases

In most cases, it’s not possible to automate system testing 100% without relying on any manual testing at all.

This is particularly true when you need to test complex test scenarios that most automation tools are not up to testing.

3. When to implement automated system testing

If your testing team has the resources to implement automated testing, either by writing custom test scripts or using automation tools to write them, automated testing can make system testing both more efficient and more reliable.

However, it’s always important to continue testing manually even when you’re confident in the quality and coverage of your automated tests because automated testing can’t replicate the depth and insight that only manual testing can offer.

Conclusion: Automated system testing vs manual system testing

Automated system testing and manual system testing are both important during the testing phase of software development.

While smaller companies may start off with only manual system testing because of the additional investment or resources that automated testing requires, most testing teams adopt a combined approach that involves automated testing as soon as they’re practically able to.

By combining automated testing with manual testing, testing teams can maximize efficiency, accuracy, and flexibility without compromising on any of the outcomes of system testing.

Best practices for system testing

If you want to optimize your system testing workflows for maximum efficiency and accuracy, follow system testing best practices.

Best practices help to ensure that you don’t miss anything during the system testing stage and that your system tests are always of a consistently high standard.

1. Plan system tests adequately

All system tests should begin with a formal testing plan that clearly outlines the test cases and approaches that will be used during testing.

Starting with a formal plan reduces the risk of delays occurring during testing and prevents disruptions that can arise from ambiguities.

It ensures that all relevant parties know what their role is and what they are responsible for.

2. Always write up detailed, accurate reports

It’s important that system testing is always well documented, or testers and software developers may not find it easy to act upon the results of your tests.

Write up clear, thorough reports for every test you carry out that detail any bugs that you find, show exactly how to replicate them, and identify how the software should behave once fixed.

Make sure that your bug reports are unambiguous and easy to follow.

3. Test on real devices

Often, testing teams choose to replicate different devices within the testing environment, without actually testing software on different devices.

If you’re building software for use on different platforms, such as mobile devices (Android, iOS, etc.), tablets, the web, and desktops (Windows, Linux, etc.), make sure that you test it on these devices to assess how it performs under different loads and whether network connection issues could cause problems on particular platforms.

4. Automate testing where possible

It’s usually best to combine manual system testing with automated system testing for the best results.

If you haven’t experimented with automated system testing yet, try out RPA + Software Testing tools that can help you to automate at least some of your system tests. This will allow you to increase your coverage and efficiency without compromising the accuracy of your results.

5. Test one feature per case

When you write up test cases, focus on testing just one feature per case where possible.

This makes it easier to reuse these test cases in future tests and it allows developers to understand more clearly how bugs arise and which features they’re triggered by.

Types of outputs from system tests

When you run system tests, it’s important to know what type of outputs to expect from your tests and how to use these outputs to inform future development and testing.

Test outputs are effectively the assets and information that you obtain by carrying out the system tests.

1. Test results

Your test results include data about how the software performed in each test case that you carried out, alongside a comparison of how you expected the software to perform.

These results help to determine whether each test case passes or fails because if the software performed in a way you didn’t expect it to, this usually means that it has failed.

2. Defects log

Defect logs are logs of all the bugs and defects that were found during system testing.

A defect log lists all the bugs found, alongside other important information such as the priority of each bug, the severity of each bug, and the symptoms and description of the bug.

You should also note down the date the bug was detected and other information that will help developers to replicate the bug again.
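
As a rough illustration, a defect log entry could be modelled as a small record. The sketch below uses Python; the field names, the 1 to 4 severity and priority scales, and the sample defects are all assumptions made for this example rather than a standard format.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Defect:
    """One row in a hypothetical defect log."""
    defect_id: str
    description: str
    symptoms: str
    severity: int          # assumed scale: 1 = critical ... 4 = cosmetic
    priority: int          # assumed scale: 1 = fix first ... 4 = fix last
    detected_on: date
    steps_to_reproduce: list = field(default_factory=list)


# Sample entries, invented purely for illustration.
defect_log = [
    Defect("D-002", "Basket total ignores discount", "Total too high",
           severity=2, priority=1, detected_on=date(2023, 3, 14),
           steps_to_reproduce=["Add discounted item", "Open basket"]),
    Defect("D-001", "Logo misaligned on login page", "Logo overlaps title",
           severity=4, priority=3, detected_on=date(2023, 3, 13)),
    Defect("D-003", "App crashes on empty order", "Crash on submit",
           severity=1, priority=1, detected_on=date(2023, 3, 15),
           steps_to_reproduce=["Open order screen", "Submit with no items"]),
]

# Order the log so the most urgent, then most severe, defects come first.
triage_order = sorted(defect_log, key=lambda d: (d.priority, d.severity))
```

Keeping priority and severity as separate fields lets the team sort or filter the log by either one.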

3. Test report

The test report is usually part of the exit criteria for finishing system testing, and it usually includes a summary of the testing carried out, GO/No-Go recommendations, phase and iteration information, and the date of testing.

You might also include any other important information about the test results or attach a copy of the defect list to this report.

Examples of system tests

System tests are designed to test the system as a whole, which means that they test all of the different software units working together as a system.

Examples of system tests can help you to understand better what a system test is and what it tests.

1. Testing functionality

A team of software engineers is putting together a new shopping app that helps grocery stores to pick and pack online orders more efficiently.

The app is composed of multiple different modules, each of which has already been tested independently in unit testing and tested alongside other modules in integration testing.

System testing is the first time all of the modules are tested in unison, and testers design test cases to assess each individual function of the application and check whether they function as expected once all of the modules are running together.

2. Testing load times

A team of software testers is testing how quickly an application loads at various points under different levels of stress.

They create test cases that describe what type of stress the application is put under (for example, how many users are using it simultaneously) and what functions and features the user is trying to load.

During system testing, load times are logged into the testing report and load times that are deemed too slow will trigger another phase of development.
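
A load-time check of this kind could be sketched as follows. This is a minimal Python illustration only: `load_home_screen` is a hypothetical stand-in for the real action being timed, the user counts are arbitrary, and the four-second threshold is an assumed limit rather than a recommendation.

```python
import time
from concurrent.futures import ThreadPoolExecutor

THRESHOLD_SECONDS = 4.0  # assumed acceptable load time


def load_home_screen() -> None:
    """Hypothetical stand-in for loading a screen in the application."""
    time.sleep(0.01)


def timed_load(_user: int) -> float:
    """Return how long one simulated user waits for the screen to load."""
    start = time.perf_counter()
    load_home_screen()
    return time.perf_counter() - start


def measure_load_times(concurrent_users: int) -> list:
    """Run the load once per simulated user, all at the same time."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        return list(pool.map(timed_load, range(concurrent_users)))


# Log the slowest load per stress level, and flag levels that are too slow.
report = {}
for users in (1, 5, 20):
    timings = measure_load_times(users)
    slowest = max(timings)
    report[users] = {"slowest": slowest, "too_slow": slowest > THRESHOLD_SECONDS}
```

In a real project, a dedicated load testing tool would normally generate the stress. The point here is only the shape of the data that ends up in the testing report.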

3. Testing configuration

When building a video game that can be used with lots of different peripherals, including a computer mouse, a VR headset, and a gaming pad, software testers undertake configuration testing to test how well each of these peripherals works with the game.

They work through each test scenario, testing each peripheral individually and together, noting down how each peripheral performs at different points in the game and whether its performance falls short of expectations.
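
The list of configurations to work through can be generated rather than written by hand. The following Python sketch assumes the three peripherals named in this example and simply enumerates every non-empty combination of them.

```python
from itertools import combinations

peripherals = ["mouse", "VR headset", "gaming pad"]

# Every non-empty subset: each peripheral alone, then each pair, then all three.
configurations = [
    combo
    for size in range(1, len(peripherals) + 1)
    for combo in combinations(peripherals, size)
]
```

With three peripherals this yields seven configurations. The count roughly doubles with every peripheral added, so larger projects usually have to prioritize the combinations that users are most likely to have.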

Types of errors and bugs detected through system testing

When you carry out system testing, the tests that you perform will allow you to identify errors and bugs within the software that haven’t been found in unit testing and integration testing.

It is possible to identify bugs of many kinds during system testing, sometimes because they were missed in earlier testing but more often because they only arise when the system is functioning as a whole.

1. Performance errors

System testing can highlight performance errors in the speed, consistency and response times of a software build.

Testers might assess how the software performs while carrying out different tasks and make a note of any errors or delays that occur during use. These are performance defects, which may or may not be considered severe enough to require further development.

2. Security errors

It’s possible to identify security errors during system testing that highlight vulnerabilities within the system’s security layer.

Security testing takes place during the system testing phase, and it can be used to identify encryption errors, logical errors, and XSS vulnerabilities within the software.

3. Usability errors

Usability errors are errors that make it difficult to use the app in the way it’s intended. They may cause inconvenience to users, which in turn can cause users to abandon the app.

Some examples of usability errors include a complex navigation system or a layout that isn’t easy to navigate across all aspects of the platform.

Usability tools can identify these errors earlier in the testing process, but they can also show up during system testing.

4. Communication errors

Communication errors occur when part of the software tries to communicate with another module and an error causes this communication to fail.

For example, if the software prompts the user to download a new update but, when the user clicks on the update download button, the update cannot be found, this is a communication error.

5. Error handling errors

Errors sometimes occur even when software is working as it should, perhaps because a component hasn’t been installed properly or because the user isn’t operating it correctly.

However, the system must be able to handle these errors correctly in a way that helps users to identify and fix the problem.

If error messages don’t contain adequate information about the error occurring, users will not be able to fix the error.
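
A system test for error handling can check the content of the message as well as the fact that an error was raised. The Python sketch below is illustrative only: `start_scanner`, the `barcode-driver` component, and the wording of the message are all hypothetical.

```python
class ComponentMissingError(Exception):
    """Raised when a required component is not installed (hypothetical)."""


def start_scanner(installed_components: set) -> str:
    """Stand-in for a feature that needs the 'barcode-driver' component."""
    if "barcode-driver" not in installed_components:
        raise ComponentMissingError(
            "Cannot start the scanner: the 'barcode-driver' component is not "
            "installed. Reinstall the app or install the driver, then try again."
        )
    return "scanner ready"


def check_error_message() -> bool:
    """Check that the failure names the cause and suggests a fix."""
    try:
        start_scanner(installed_components=set())
    except ComponentMissingError as error:
        message = str(error)
        return "barcode-driver" in message and "install" in message.lower()
    return False  # no error was raised, so the error path was never exercised
```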

Common metrics in system testing

When you carry out system testing, you might track certain testing metrics to help your testing team to monitor how effective system testing is, how commonly bugs are found, and whether system testing is occurring at the right stage of the testing cycle.

For example, if you track the number of tests that pass and the number that fail and find that a high proportion of system tests fail, you may conclude that more thorough testing is needed earlier in the testing cycle to identify bugs and errors before system testing begins.

1. Absolute metrics

Absolute metrics are those metrics that simply give you an absolute number instead of a proportion or ratio.

Absolute metrics can be useful, but because they’re absolute numbers it’s not always easy to interpret what they mean.

Some examples of absolute metrics include system test duration (the length of time it takes to run a system test) and the total number of defects found during system testing.

2. Test efficiency metrics

Test efficiency metrics help testing teams understand how efficient their current system testing procedures are, although they don’t provide any information about the quality of system tests.

Some examples of test efficiency metrics include tests passed percentage and defects fixed percentage.

The tests passed percentage can tell you whether you’re passing too many tests and therefore missing bugs, especially if you see a high tests passed percentage alongside a high defect escape ratio.

3. Test effectiveness metrics

Test effectiveness metrics tell testers something about the quality of the system tests they’re performing.

They measure how effective the system tests are in identifying and evaluating bugs and defects within the system.

Total defect containment efficiency is an example of a test effectiveness metric that displays the ratio of bugs found during the testing stage when compared to bugs found after release.
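
One common way to express this metric is as the percentage of all known defects that were caught before release. The Python sketch below assumes that formulation. Teams define the metric in slightly different ways, and the sample figures are invented.

```python
def defect_containment_efficiency(found_in_testing: int,
                                  found_after_release: int) -> float:
    """Percentage of all known defects that testing caught before release."""
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded, so the metric is undefined")
    return 100 * found_in_testing / total


# Example: 47 defects caught in testing, 3 reported after release.
dce = defect_containment_efficiency(found_in_testing=47, found_after_release=3)
```

A value close to 100 suggests that few defects are escaping to users, although the figure only becomes meaningful once post-release defects have had time to surface.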

4. Test coverage metrics

Test coverage metrics help testers to understand how complete their coverage is across the entire system that they’re trying to test.

For example, you might measure what percentage of your system tests are automated or how many of the required tests have been executed so far.

A requirement coverage metric also helps testers track what proportion of the required features have been covered by testing.
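
Requirement coverage can be computed from a simple mapping between test cases and the requirements they exercise. In this Python sketch the requirement IDs, test case IDs, and the mapping itself are made up for illustration.

```python
# Hypothetical requirement IDs for the system under test.
required = {"REQ-1", "REQ-2", "REQ-3", "REQ-4", "REQ-5"}

# Hypothetical mapping of executed test cases to the requirements they cover.
tests_to_requirements = {
    "TC-0788": {"REQ-1"},
    "TC-0321": {"REQ-2", "REQ-3"},
    "TC-0400": {"REQ-3", "REQ-5"},
}

# A requirement counts as covered if at least one test case exercises it.
covered = set().union(*tests_to_requirements.values()) & required
uncovered = sorted(required - covered)
coverage_pct = 100 * len(covered) / len(required)
```

Listing the uncovered requirements alongside the percentage shows where the next test cases are needed.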

5. Defect metrics

Defect metrics are metrics that measure the presence of defects in different ways. Some defect metrics might focus on the severity of defects while others might focus on the type or root cause of the defects.

One example of a common defect metric is defect density, which measures the number of defects found in a release relative to the size of that release.

Defect density is usually presented as the number of defects per 1000 lines of code.
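
As a quick worked example, the per-1000-lines calculation can be written as a short Python function. The defect count and code size used here are invented figures.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Number of defects per 1000 lines of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return 1000 * defects / lines_of_code


# Example: 30 defects found in a release of 15,000 lines of code.
density = defect_density(defects=30, lines_of_code=15_000)
```

In this example the release has a density of 2 defects per 1000 lines of code.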

System test cases

System test cases are the test scenarios that are used in system testing to test how the software functions and whether it meets the expectations of developers, testers, users, and stakeholders.

1. What are test cases in system testing?

Test cases are essentially instructions that define what is to be tested and what steps the tester must carry out to test each individual case.

When you’re writing test cases for system tests, it’s important to include all the information that testers need to execute each test. Include a test case ID for each test case and information about how to execute the test and what results you expect, as well as the pass and fail criteria for each test case where relevant.

2. How to write system test cases

If you’re new to writing test cases, you can follow the steps below to write test cases for system testing. Writing test cases for other types of software testing is a very similar process.

- Define the area that you want your test case to cover.

- Make sure that the test case is easy to test.

- Apply relevant test designs to each test case.

- Assign each test case a unique test case ID.

- Include a clear description of how to run each test case.

- Add preconditions and postconditions for each test case.

- Specify the result that you expect from each test case.

- Outline the testing techniques that should be used.

- Ask a colleague to peer-review each test case before moving on.
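
The steps above can be mirrored in a simple template so that incomplete test cases are easy to spot. The Python sketch below is one possible layout. The field names and the choice of which fields are mandatory are assumptions, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class SystemTestCase:
    """A hypothetical template for one system test case."""
    case_id: str
    area: str
    description: str
    preconditions: list = field(default_factory=list)
    steps: list = field(default_factory=list)
    expected_result: str = ""
    postconditions: list = field(default_factory=list)
    technique: str = ""
    reviewed_by: str = ""


def missing_fields(case: SystemTestCase) -> list:
    """Return the names of mandatory fields that are still empty."""
    mandatory = ("case_id", "area", "description", "steps",
                 "expected_result", "reviewed_by")
    return [name for name in mandatory if not getattr(case, name)]


draft = SystemTestCase(
    case_id="0788",
    area="Pricing",
    description="Scan an item and verify its price.",
    steps=["Scan an item", "Read the displayed price"],
    expected_result="The scanned price matches the current stock price.",
)
```

Calling `missing_fields(draft)` reports that the draft still needs a peer review before it is ready to use.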

3. Examples of system test cases

Using example test cases may help you to write your own test cases. Below are two examples of system test cases that testers might use to test the function of an application or software.

Grocery scanning app price validation

Test ID: 0788

Test case: Validate item price

Test case description: Scan an item and verify its price.

Expected results: The scanned price should align with the current stock price.

Result: The item scanned at $1, which aligns with the current stock price.

Pass/fail: Pass.

Management software end-to-end transaction response time

Test ID: 0321

Test case: Home screen load times

Test case description: Ensure that the home screen loads within an acceptable time.

Expected results: The screen should load within four seconds.

Result: The screen loaded in six seconds.

Pass/fail: Fail.
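
If these two test cases were automated, the pass/fail decision might look something like the Python sketch below. The function names are hypothetical, and the measured values are hard-coded from the examples above rather than taken from a real application.

```python
def check_price(scanned_price: float, stock_price: float) -> str:
    """Test 0788: the scanned price must match the current stock price."""
    return "Pass" if scanned_price == stock_price else "Fail"


def check_load_time(seconds: float, limit_seconds: float = 4.0) -> str:
    """Test 0321: the screen must load within the time limit."""
    return "Pass" if seconds <= limit_seconds else "Fail"


results = {
    "0788": check_price(scanned_price=1.00, stock_price=1.00),
    "0321": check_load_time(seconds=6.0),
}
```

Stating the expected result as an explicit value or limit is what makes the outcome unambiguous.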

Best system testing tools

Using system testing tools is one of the simplest ways to streamline the process of testing and reduce the amount of time that testing teams spend on time-consuming manual tasks.

System testing tools can either automate elements of the system testing process for you, or they might make writing test cases and tracking testing progress easier.

Five best free system testing tools

If you’re not ready to spend a hefty chunk of your budget on system testing tools but you want to explore what’s out there and maybe improve the efficiency of your system testing processes at the same time, the good news is that there are lots of free testing tools available online.

Free testing tools don’t offer all the same functionality as paid testing tools, but they can provide smaller businesses with a cost-effective way to explore software automation and RPA.

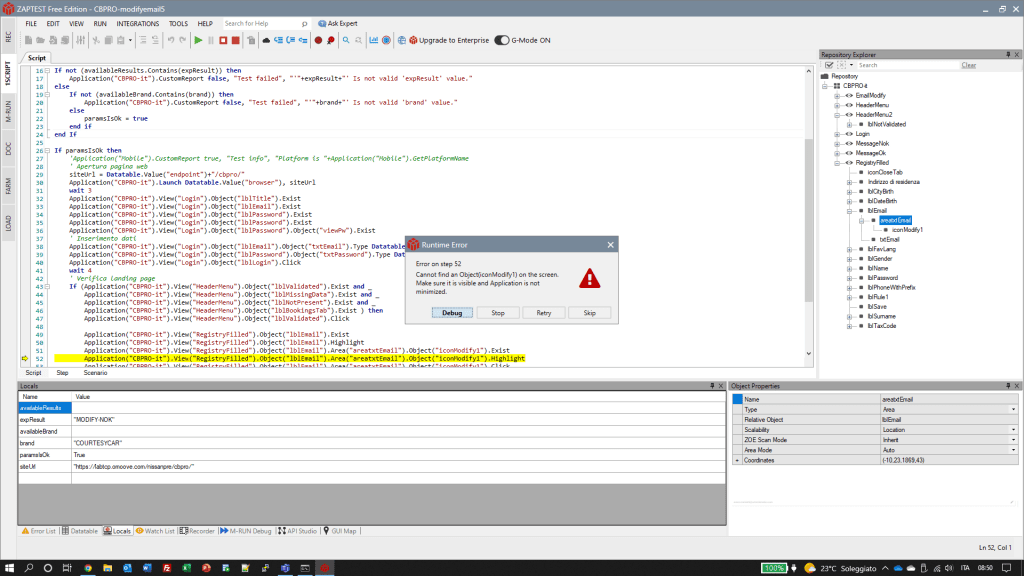

1. ZAPTEST FREE Edition

ZAPTEST is a suite of software testing tools that can be used for system testing and other types of software testing.

ZAPTEST is available as both a free and a paid enterprise edition, but the free edition is the perfect introduction to automated system testing for smaller companies and businesses wanting to take the first steps toward testing automation.

ZAPTEST can automate system tests for both desktop and handheld devices and allows testers to automate tests without coding.

2. Selenium

Selenium is one of the most well-known open-source testing tools available on the market.

The free version of Selenium offers automation testing tools that can be used in system testing, regression testing, and bug reproduction and you can use it to create your own test scripts for lots of different test scenarios.

It does however come at the cost of simplicity and ease of use and can be quite difficult to learn for non-technical users.

3. Appium

Appium is a free system testing tool that’s suitable for use specifically with mobile applications.

You can use Appium to automate system testing for apps designed for use with iOS and Android smartphones and tablets.

This free tool isn’t suitable for use with desktop applications, which is one of its biggest weaknesses.

4. Testlink

If you just want to make it easier to plan, prepare, and document system testing, Testlink is a great free tool that makes test documentation management simple.

Using Testlink, you can easily sort reports into sections to find the information that you need when you need it.

Testlink is a valuable testing tool whether you’re conducting system testing, smoke testing, or any other kind of software testing.

5. Loadium

Loadium is a free testing tool that is specifically designed for performance testing and load testing.

Its focus on performance and load testing, though, represents a significant weakness for users looking to automate an entire spectrum of end-to-end tests.

Four best enterprise system testing tools

As your business grows you might find that free testing tools no longer suit your requirements. A lot of free tools like ZAPTEST offer enterprise versions as well as free versions.

1. ZAPTEST Enterprise edition

ZAPTEST offers an enterprise version of their testing tool that boasts the same easy-to-use features and intuitive interface of the free tool but scales better for larger teams who may require more intensive testing or who want to test more complex software builds.

The enterprise version of ZAPTEST offers unlimited performance testing and unlimited iterations as well as an assigned ZAP certified expert on call for support, working as part of the client team (this in itself represents a significant advantage when compared with any other automation tools available).

Its Unlimited Licenses model is also a leading proposition in the market, ensuring that businesses will have fixed costs at all times, regardless of how fast they grow.

2. SoapUI

SoapUI is a testing tool that makes it possible to manage and execute system tests on various web service platforms and APIs.

Testing teams can use SoapUI to minimize the amount of time they spend on time-consuming tasks and to develop more thorough and efficient testing strategies.

3. Testsigma

Testsigma is a software testing platform that works off the shelf. It allows product teams to plan and execute software tests automatically on websites, mobile apps, and APIs.

The platform is built with Java but it works with test scripts written in simple English.

4. TestingBot

TestingBot is a relatively low-cost enterprise solution for businesses that want to experiment in this sector without spending a lot of money from the start. TestingBot offers testers a simple way to test both websites and mobile apps using a grid of 3200 browser and mobile device combinations.

It lacks the functionality of larger Enterprise tools, but it is a good option for companies with lower budgets.

When you should use enterprise vs free system test tools

Whether you choose to use enterprise or free system testing tools depends on the needs of your team, your budget, your priorities, and your work schedule.

It goes without saying that enterprise tools offer more features and functionality when compared to free tools, but for smaller companies without much space in the budget, free tools are a fantastic option.

If your business is growing or if you’re finding that your testing team is spending more time than you’d like on system testing and other types of software testing, upgrading to enterprise testing tools and learning how to take full advantage of these tools could help you to scale your business further to meet growing demand.

Moreover, by using tools like ZAPTEST Enterprise, which offer innovative Software + Service models and unlimited licence models, you can both close your technical knowledge gap and keep your costs fixed, regardless of how fast you grow and how much you use the tools.

System testing checklist, tips, and tricks

Before you start system testing, run through the system testing checklist below and follow these tips to optimize your system testing for accuracy, efficiency, and coverage.

A system testing checklist can help to ensure that you’ve covered everything you need as you progress through system testing.

1. Involve testers during the design phase

While testers don’t usually work on software until the development and design phase is finished, by involving testers early on it’s easier for testers to understand how different components work together and factor this into their testing.

This often results in more insightful exploratory testing.

2. Write clear test cases

When you write your test cases, make sure that they’re clear and unambiguous.

Testers should be able to read test cases and immediately understand what needs to be tested and how to test it.

If you need to, explain where to find the feature that requires testing and which steps to take during the system testing process.

3. Maximize test coverage

It’s not usually possible to achieve 100% testing coverage when you carry out system testing, even if you do use automation tools.

However, the greater your test coverage the more likely you are to identify and fix bugs before release.

Try to achieve a test coverage of at least 90% or as close to this as possible.

4. Analyze results thoroughly

Analyze the results of each system test thoroughly, and report bugs and defects clearly in your documentation.

The more details that you can provide about bugs, the easier it will be for developers to replicate those bugs later.

If you have ideas about why the bugs are occurring and how the bugs may be fixed, include these in your test results.

5. Go beyond requirement testing

Don’t just test your applications to see if they do what they are supposed to do.

Test how your software works beyond its requirements to see how it responds to tasks and operations outside intended use. This could help you to identify bugs and defects that you would otherwise miss.

7 mistakes and pitfalls to avoid when implementing system tests

When implementing system tests for the first time, it’s important to be aware of common mistakes and pitfalls that testing teams often make.

Knowing what these mistakes are will make it easier to avoid them, which should increase the effectiveness and accuracy of your own system testing.

1. Starting without a testing plan

It’s important to create a detailed testing plan before you start system testing.

If you start system testing without a plan in place, it’s easy to forget some of the test cases that you’re intending to execute or to drift into test cases that fall outside the intended scope.

Most people can’t remember the full details of a testing plan unless it’s clearly documented, and an undocumented plan can’t easily be handed over to other testers.

2. Not defining the scope of system testing

System testing is a multi-dimensional task that involves the testing of many different aspects of a single software build.

Depending upon the type of software you’re developing and what you’ve tested so far, the scope of system testing can vary hugely between tests.

It’s important to define the scope of testing before testing begins and to ensure that this scope is understood by all members of the testing team.

3. Ignoring false positive and false negative results

False positive results happen when system tests fail even though the software is actually working as expected.

Likewise, false negatives occur when a test passes even though the software isn’t working as expected.

Sometimes it can be difficult to spot false positives and false negatives, especially if you simply look at the test results without delving into the actual outputs of the test. False positives and negatives are particularly likely and easy to miss when conducting automated system testing.
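
One way to catch misleading results is to compare each reported verdict with a manual review of the test’s actual output. The Python sketch below assumes that such a review exists for a sample of runs. The test IDs and review outcomes are invented.

```python
def audit(reported_verdict: str, behaved_as_expected: bool) -> str:
    """Compare an automated verdict with a manual review of the output."""
    if reported_verdict == "pass" and not behaved_as_expected:
        return "misleading pass"
    if reported_verdict == "fail" and behaved_as_expected:
        return "misleading fail"
    return "verdict confirmed"


# (test ID, verdict reported by the tool, did a reviewer confirm the behavior?)
runs = [
    ("TC-1", "pass", True),
    ("TC-2", "pass", False),
    ("TC-3", "fail", True),
    ("TC-4", "fail", False),
]

# Keep only the runs where the verdict and the manual review disagree.
needs_review = {
    test_id: audit(verdict, behaved)
    for test_id, verdict, behaved in runs
    if audit(verdict, behaved) != "verdict confirmed"
}
```

Runs flagged this way usually point to a problem in the test script or test data rather than in the software itself.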

4. Testing with similar types of test data

Use several different types of test data, and vary the attributes of that data as much as possible, to increase the coverage of your system testing.

This makes you less likely to miss bugs and defects, and it adds value to the testing that you carry out.

By covering different types of test data, you’ll gain a more detailed picture of how the product will behave after release.
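
Varied test data can be generated by combining a few values for each attribute. In the Python sketch below the attributes and their values are hypothetical, chosen to mix typical inputs with edge cases such as empty names and zero quantities.

```python
from itertools import product

# Hypothetical attribute values mixing typical inputs with edge cases.
item_names = ["Milk", "", "Crème fraîche", "x" * 256]
quantities = [0, 1, 999]
prices = [0.0, 0.99, 10_000.0]

# One test record for every combination of name, quantity, and price.
test_data = [
    {"name": name, "quantity": quantity, "price": price}
    for name, quantity, price in product(item_names, quantities, prices)
]
```

Twelve distinct values expand into 36 different records here, which is far more variety than a handful of similar hand-written rows.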

5. Ignoring exploratory testing

While following the test plan is important, it’s also important to make space for exploratory testing and to allow testers to try out different features and functions as they find them during testing.

Exploratory testing can often reveal new bugs that would otherwise be missed or bugs that have already been missed during other phases of testing.

You can even schedule exploratory testing sessions by organizing test jam sessions where testers all carry out unplanned system testing for a set period of time.

6. Not reviewing test automation results regularly

If you’re new to software system testing and, in particular, automated testing, you might think that you can just set the test running and leave it.

But it’s important to review test automation results regularly and make changes to the test automation code where necessary.

For example, if you make any changes to the software that you’re testing, these should be reflected in the code of automated tests.

Read automated test results carefully to understand every output of the test, and not just the pass/fail results.

7. Using the wrong automation tool

There are lots of automation tools available today, some of which are free to use and others which users have to pay a monthly fee for.

While beginners usually opt for open-source tools, it’s important to make sure that the tool you choose to use suits your requirements and offers the features that you need it to.

For example, open-source tools are often known for limited functionality, non-intuitive UIs, and a steep learning curve. By contrast, full-stack testing tools like ZAPTEST Free Edition provide top-end testing and RPA functionality like 1SCRIPT, Cross Browser, Cross Device, and Cross Platform Technology in an easy-to-use codeless interface that is suitable for both non-technical and experienced testers.

And, sometimes it’s worth investing in a slightly more expensive, enterprise level automation tool if the functionality that it offers is a much better fit for your project.

Conclusion

System testing is an important stage of software testing that checks the system as a whole and makes sure that every individual component works in unison smoothly and efficiently.

It’s the stage of software testing that comes after integration testing and before user acceptance testing, and it’s one of the last formal software testing stages that happens before initial release.

System testing allows testers to identify different kinds of bugs including functional and non-functional errors, as well as usability errors and configuration defects.

It’s possible to perform system testing manually or to automate system testing, although in most cases it’s recommended to take a hybrid approach to maximize efficiency while still making space for exploratory testing.

By following best practices and avoiding the common pitfalls of system testing, testing teams can carry out accurate, effective system tests that cover most key areas of the build.

FAQs and resources

If you’re new to system testing, there are lots of resources online that can help you to learn more about system testing and how to carry out system tests.

Below are details of some of the useful online system testing resources as well as answers to some of the most frequently asked questions about system tests.

1. The best courses on system testing

Taking online courses in system testing or software testing can help QA professionals to develop their understanding of system testing and earn qualifications that demonstrate that knowledge.

Online training sites like Coursera, Udemy, edX and Pluralsight offer free and paid courses in software testing and automation for professionals and beginners.

Some examples of online courses in system testing are:

- The Complete 2023 Software Testing Bootcamp, Udemy

- Software Testing and Automation Specialization, Coursera

- Automated Software Testing, edX

- Automated Software Testing with Python, Udemy

- Business Analyst: Software Testing Processes and Techniques, Udemy

Look for online courses that match your experience level and suit your budget. If you work in QA, you may be able to ask your employer to sponsor you to take an accredited course in software testing.

2. What are the top 5 interview questions on system testing?

If you’re preparing for an interview for a role that might involve system testing or other types of software testing, preparing answers for common interview questions in advance could help your performance at your interview.

Some of the most common interview questions on system testing include:

- How does system testing differ from integration testing?

- What are the pros and cons of automated system testing?

- How many types of system testing can you name?

- How would you maximize test coverage during system testing?

- What kind of bugs and defects would you expect to find in system tests?

You can use these questions to prepare answers following the STAR structure in advance of your interview, using past examples from your career to demonstrate your knowledge of system testing and other types of software testing.

3. The best YouTube tutorials on system testing

If you’re a visual learner, you may find it easier to understand what system testing is and how it works alongside other types of software testing by watching videos on system testing.

There are lots of tutorial videos on YouTube that explain what system testing is and how to begin system testing whether you want to perform it manually or using automation tools. Some of the best YouTube tutorials on system testing include:

- What is System Testing?

- Acceptance testing and System Testing

- What is System Testing and How Does it Work?

- System Integration Testing with a Real Time Example

- What is System Testing in Software Testing?

4. How to maintain system tests

Test maintenance is the process of adapting and maintaining system tests and other kinds of software tests to keep them up to date as you make changes to a software build or change the code.

For example, if you carry out system testing and find bugs and defects, you’ll send the software build back to the developers for adjustments. Testing teams may then have to maintain test scripts to make sure that they adequately test the new software build when it’s time to test again.

Test maintenance is an important aspect of software testing, and testers can ensure that they keep their tests maintained by following maintenance best practices.

These include:

1. Collaboration:

Developers and testers should collaborate to ensure that testers know which aspects of the code have been changed and how this might affect test scripts.

2. Design:

Design test scripts before you start automating tests. This ensures that the tests that you do automate are always fit for purpose.

3. Process:

Take software testing maintenance into consideration during the design process. Remember that you’ll have to maintain tests and factor this into scheduling, test plans, and test design.

4. Convenience:

Update all your tests, including system tests and sanity tests, from a single dashboard if possible.

This means that updating tests is much faster and more convenient, and it minimizes the risk of forgetting to update a particular test when changes have been made to the software build.

5. Is system testing white box or black box testing?

System testing is a form of black-box testing.

Black box testing differs from white box testing in that it considers only the external functions and features of the software. White box testing tests how the software runs internally, for example how the code functions and works together.

Black box testing doesn’t require knowledge of the internal workings of the system or of the code, instead simply requiring that testers test the outputs and functions of the software application and assess these against set criteria.

System testing does involve both functional and non-functional testing, but testers use a black box technique to test even non-functional aspects of the build.

For this reason, system testing is generally considered to be a form of black-box testing.